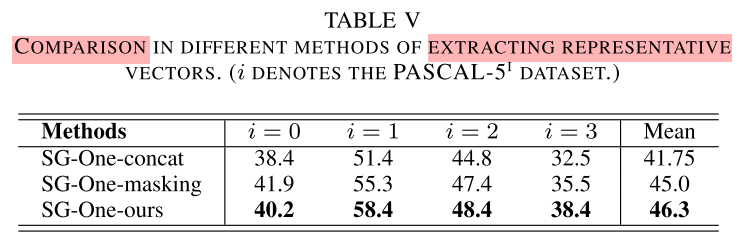

SG-One(SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation)[1] adopt a masked average pooling strategy for producing the

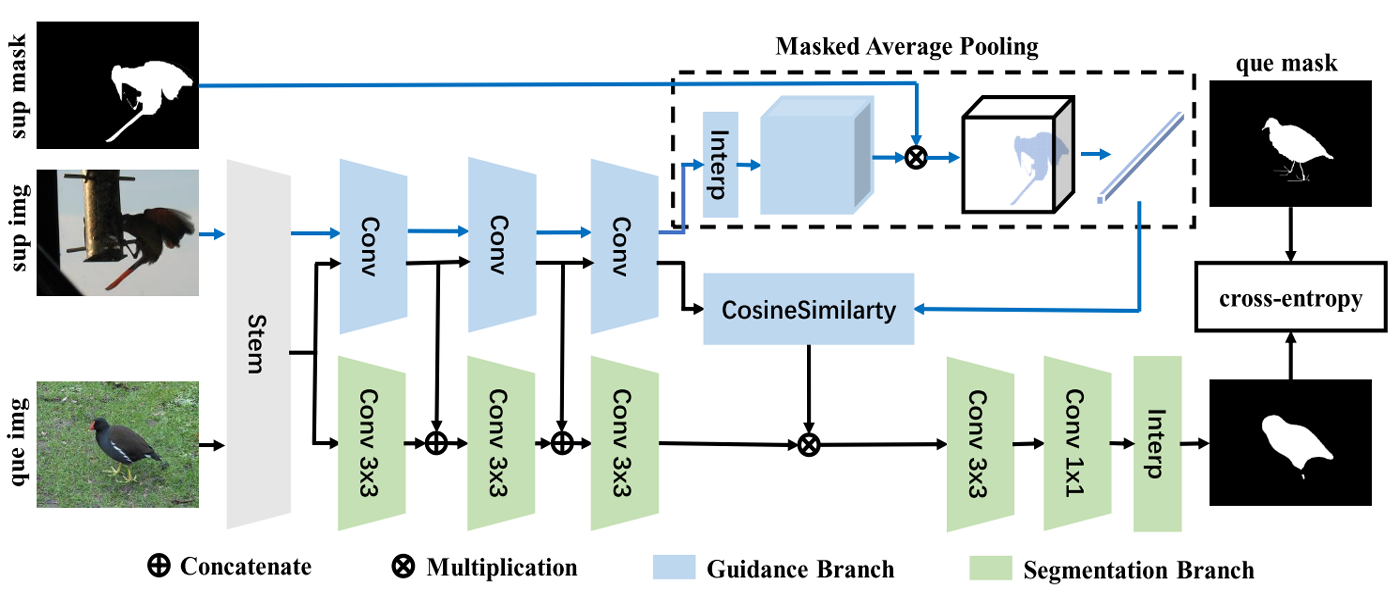

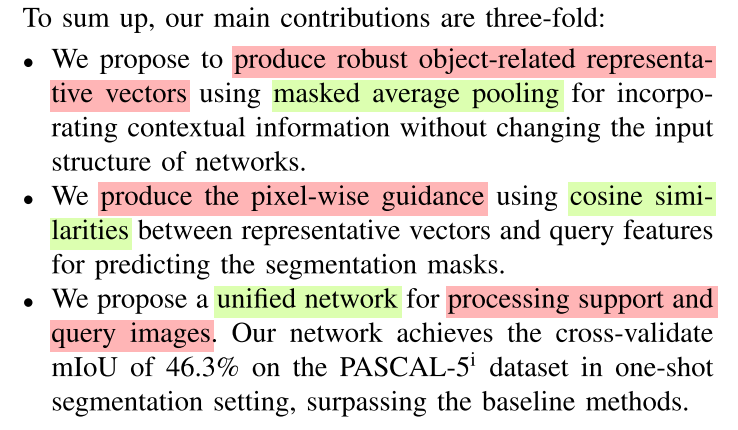

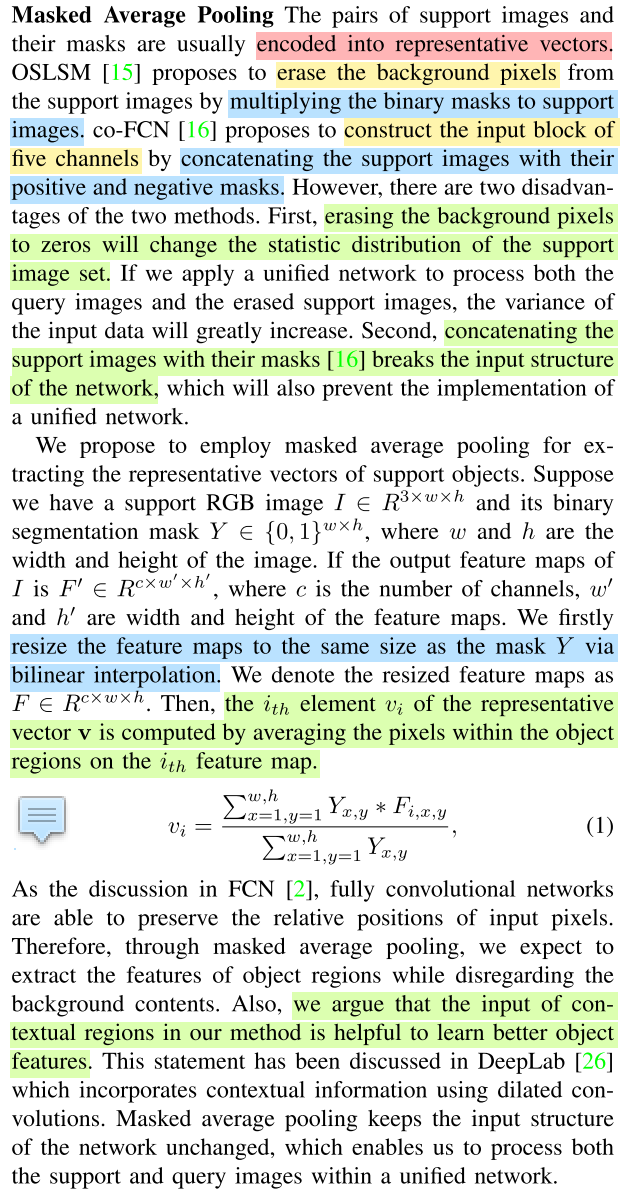

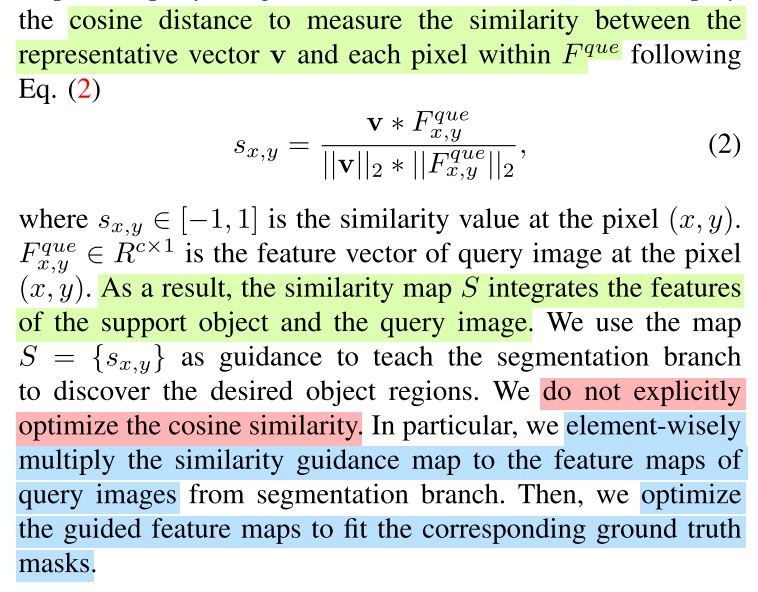

guidance features, then leverage the cosine similarity to build therelationship. There are some details of reading and implementing it.


Paper & Code & Note

Paper: SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation (arXiv 2018 / TCYB 2020 paper)

Code: PyTorch

Note: Mendeley

Paper

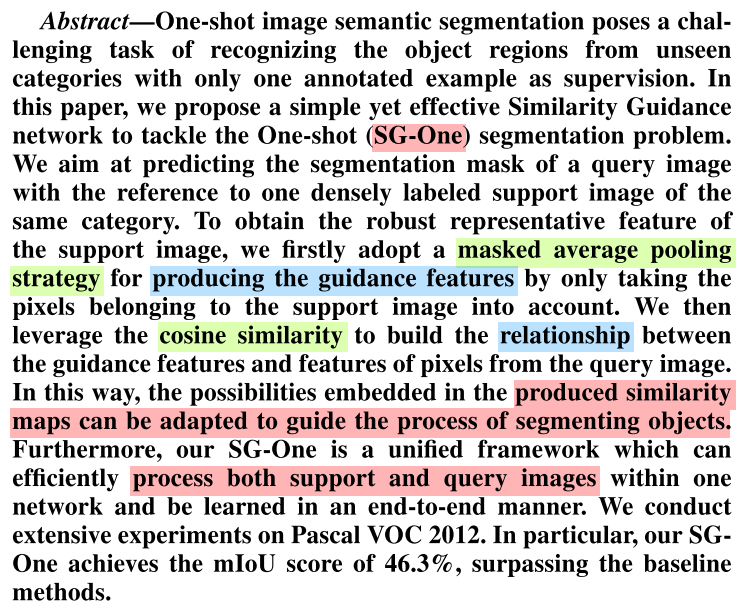

Abstract

Problem Description

Existing methods are all based on the Siamese framework, that is, a pair of parallel networks is trained to extract the features of labeled support images and query images.

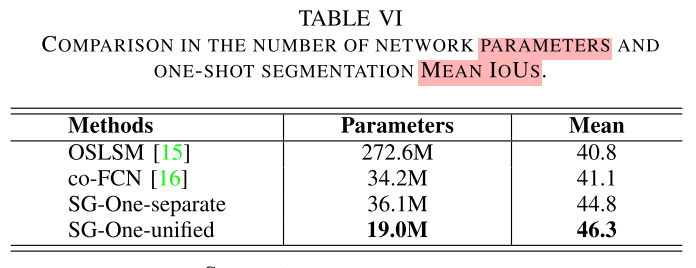

- The parameters of the two parallel networks are redundant, which makes the model prone to overfitting and wastes computational resources.
- Combining the features of support and query images by mere multiplication is inadequate for guiding the query network to learn high-quality segmentation masks.

Problem Solution

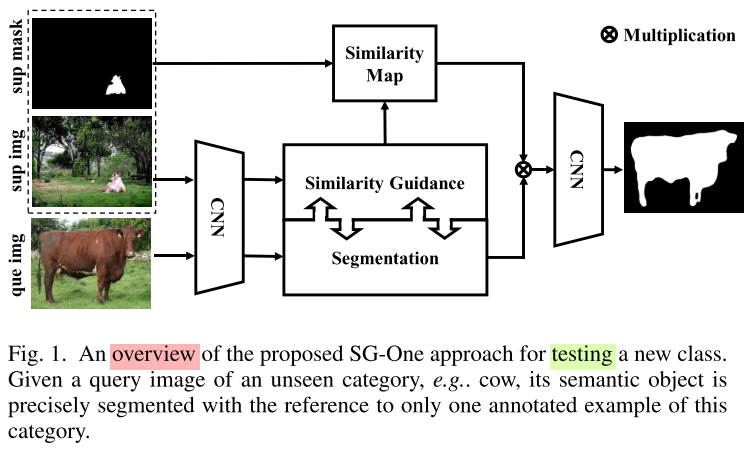

Conceptual Understanding

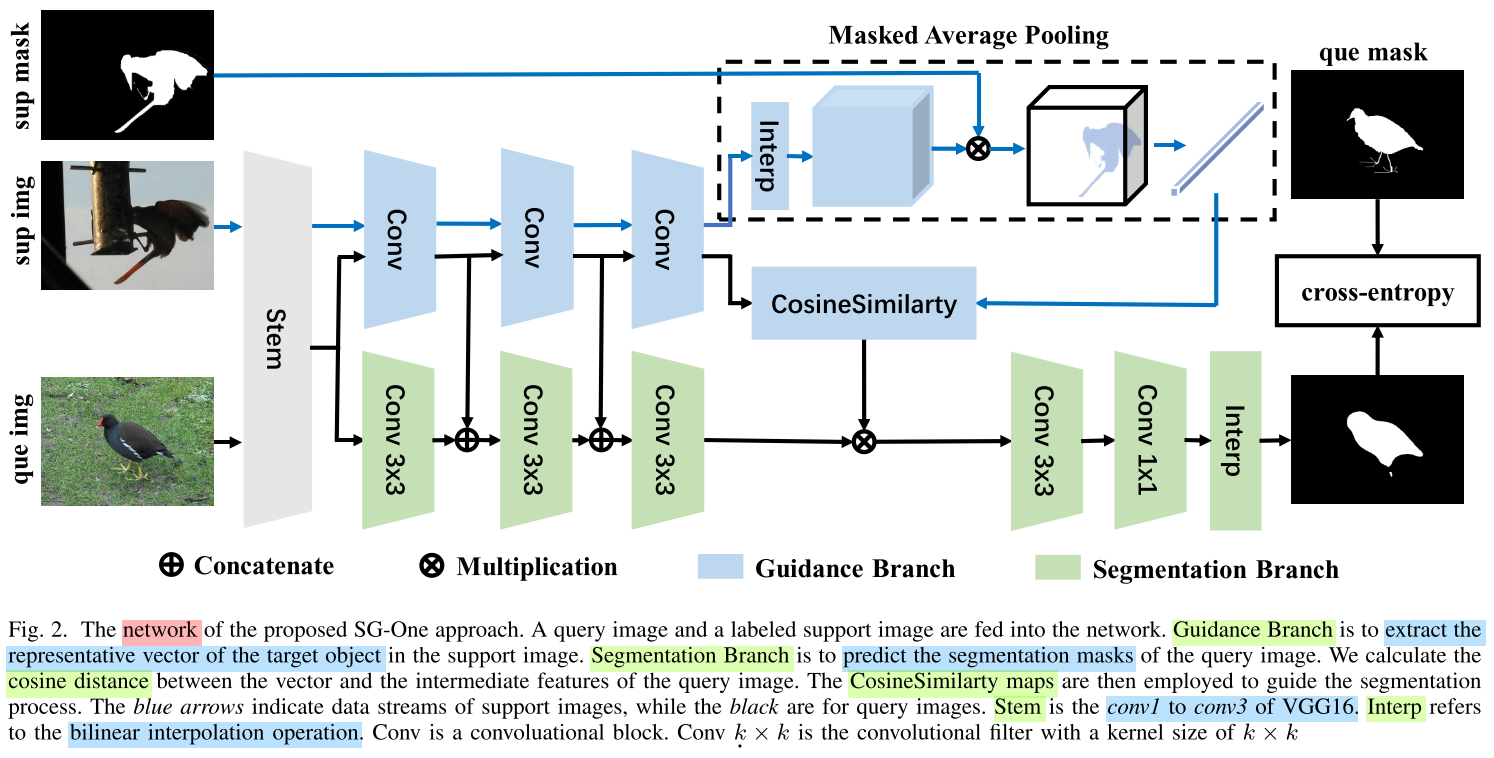

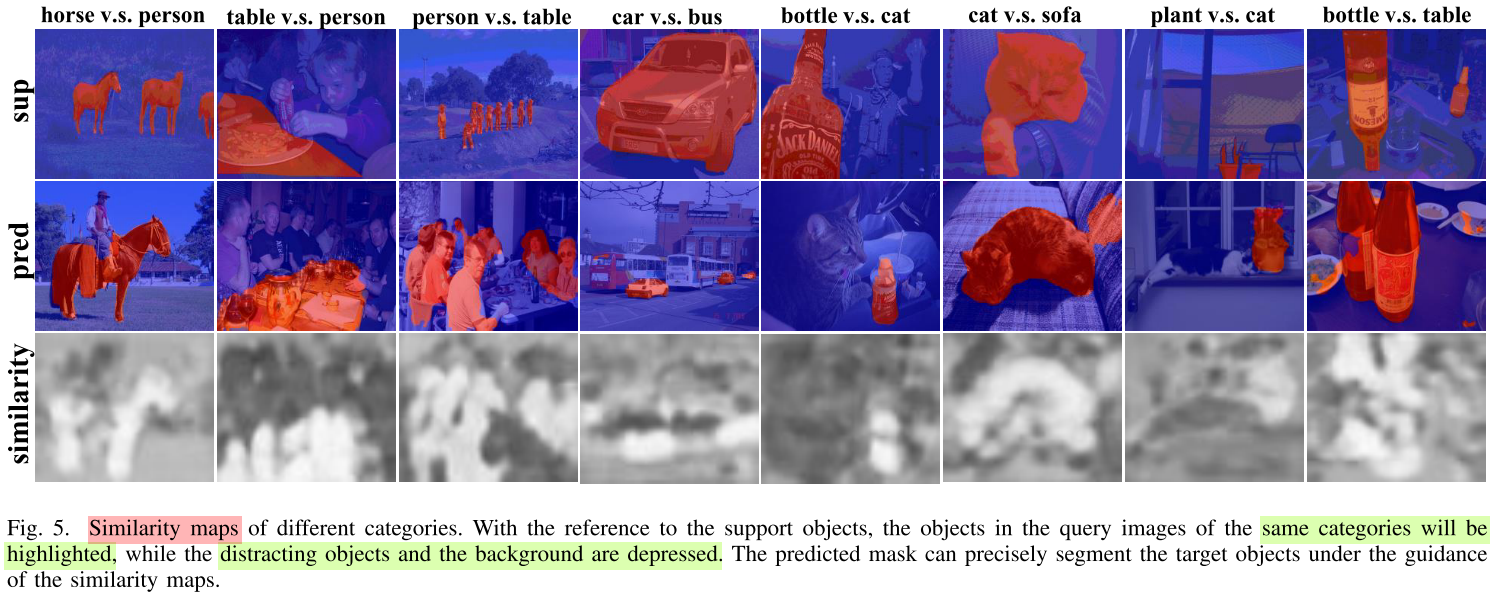

- Similarity Guidance branch: The representative vectors extracted from support images are expected to contain the high-level semantic features of a specific object.
- Segmentation branch: Through concatenation, the Segmentation branch can borrow features from the parallel branch, and the two branches can exchange information during the forward and backward passes.
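To make the interaction between the two branches concrete, here is a minimal NumPy sketch (not the author's PyTorch code). It assumes the Segmentation branch concatenates its own features with the guidance-branch features along the channel axis, then reweights every spatial position by a similarity map. The function name `fuse_branches`, the tensor shapes, and the order of the two steps are illustrative assumptions.

```python
import numpy as np

def fuse_branches(seg_feat, guide_feat, sim_map):
    """Hypothetical fusion step (an assumption, not the reference code).

    seg_feat:   (C1, H, W) features from the Segmentation branch
    guide_feat: (C2, H, W) features borrowed from the Similarity Guidance branch
    sim_map:    (H, W) similarity guidance map for the query image
    Returns a (C1 + C2, H, W) array.
    """
    # Channel-wise concatenation lets the Segmentation branch reuse guidance features.
    fused = np.concatenate([seg_feat, guide_feat], axis=0)
    # Broadcasting the map over channels scales each position by its similarity.
    return fused * sim_map[None, :, :]

seg_feat = np.ones((2, 3, 3))
guide_feat = 2.0 * np.ones((1, 3, 3))
sim_map = np.zeros((3, 3))
sim_map[1, 1] = 0.5  # only the centre pixel resembles the support object

fused = fuse_branches(seg_feat, guide_feat, sim_map)
print(fused.shape)
```

In a trainable framework both operations are differentiable, so gradients from the segmentation loss reach the guidance branch as well, which is one way to read "communicate information during the forward and backward stages".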

Core Conception

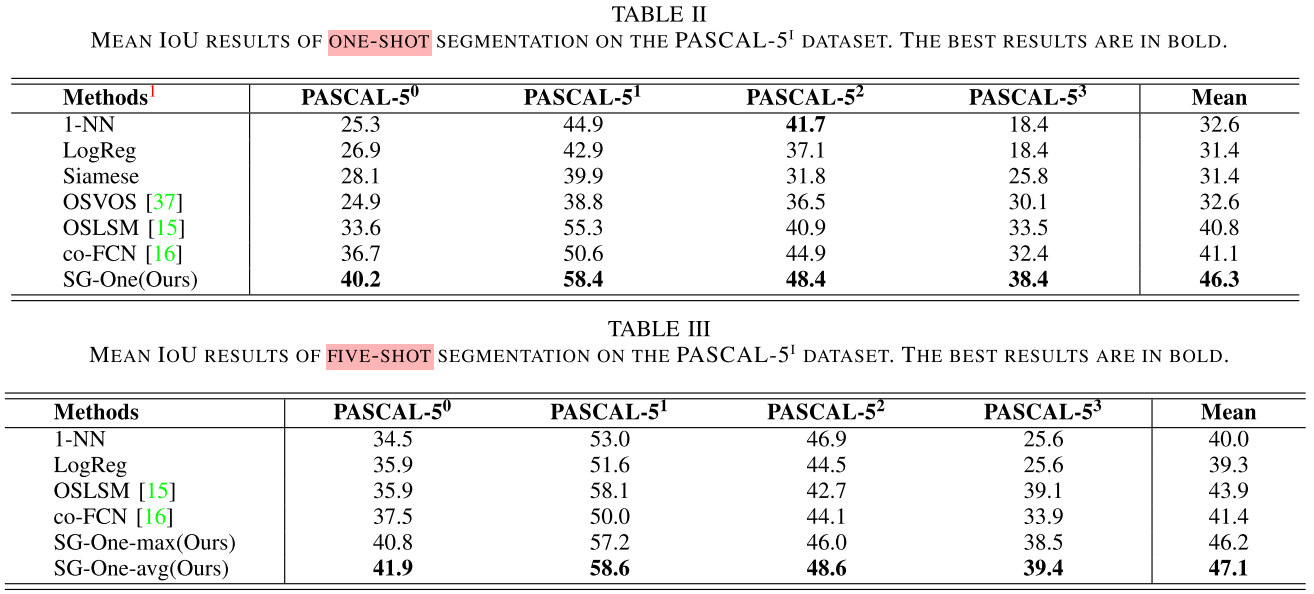

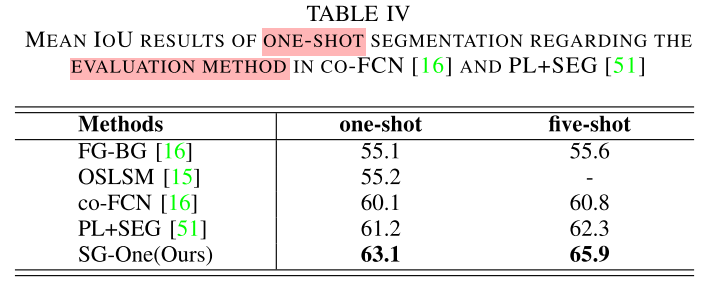
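The introduction describes two core operations: masked average pooling to get a guidance vector from the support image, and cosine similarity to relate that vector to the query features. The following is a minimal NumPy sketch of both, not the author's PyTorch implementation. It assumes the support mask has already been resized to the feature-map resolution; the function names, shapes, and the `eps` term are illustrative.

```python
import numpy as np

def masked_average_pooling(features, mask, eps=1e-8):
    """Average features over the masked region only.

    features: (C, H, W) support feature map
    mask:     (H, W) binary mask at feature resolution (assumed pre-resized)
    Returns a (C,) representative vector.
    """
    mask = mask.astype(features.dtype)
    weighted_sum = (features * mask[None, :, :]).sum(axis=(1, 2))
    return weighted_sum / (mask.sum() + eps)  # eps guards against an empty mask

def cosine_similarity_map(vector, features, eps=1e-8):
    """Cosine similarity between a (C,) vector and every pixel of (C, H, W) features.

    Returns an (H, W) map with values in [-1, 1].
    """
    dot = np.tensordot(vector, features, axes=([0], [0]))  # (H, W)
    norms = np.linalg.norm(vector) * np.linalg.norm(features, axis=0)
    return dot / (norms + eps)

rng = np.random.default_rng(0)
support_feat = rng.random((4, 6, 6))  # toy non-negative features
support_mask = np.zeros((6, 6))
support_mask[1:4, 2:5] = 1            # toy object region
query_feat = rng.random((4, 6, 6))

v = masked_average_pooling(support_feat, support_mask)
sim = cosine_similarity_map(v, query_feat)
print(v.shape, sim.shape)
```

Pooling only inside the mask keeps background pixels out of the representative vector, and the resulting map marks the query positions whose features point in the same direction as that vector.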

Experiments

Code

[Updating]

Note

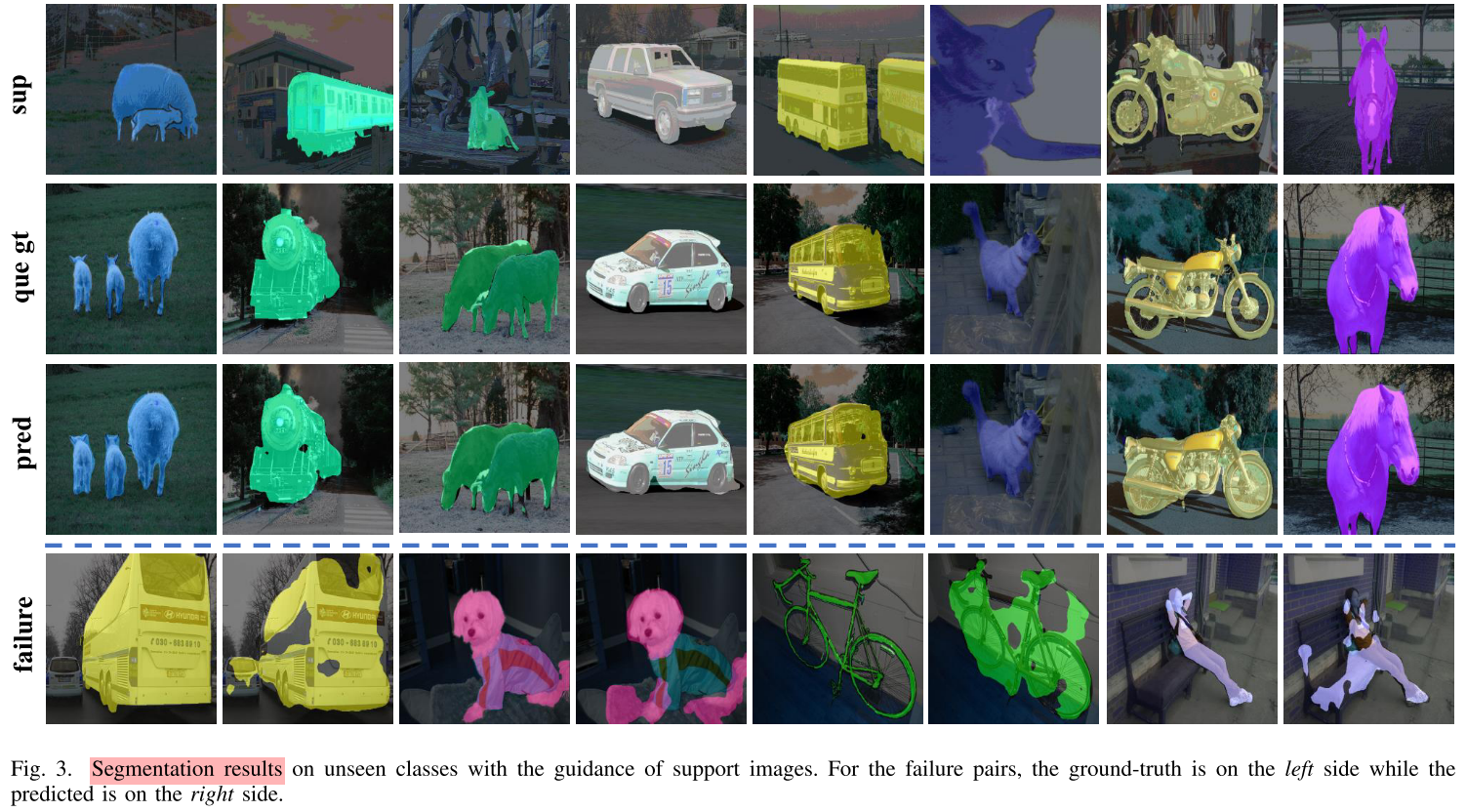

- The latent distributions of the training classes and testing classes do not align, which prevents us from obtaining better features for input images.
- The predicted masks sometimes cover only part of the target regions and may include background noise if the target object is too similar to the background.

References

[1] Zhang X, Wei Y, Yang Y, et al. SG-One: Similarity guidance network for one-shot semantic segmentation[J]. IEEE Transactions on Cybernetics, 2020.

[2] SG-One. https://github.com/xiaomengyc/SG-One.