JRMOT [1] is a novel 3D MOT system that integrates information from 2D RGB images and 3D point clouds into a real-time framework. These notes cover some details of reading and implementing it.

Contents

Paper & Code & Note

Paper: JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset (arXiv 2020)

Code: [Pytorch][Updating]

Note: JRMOT

Paper

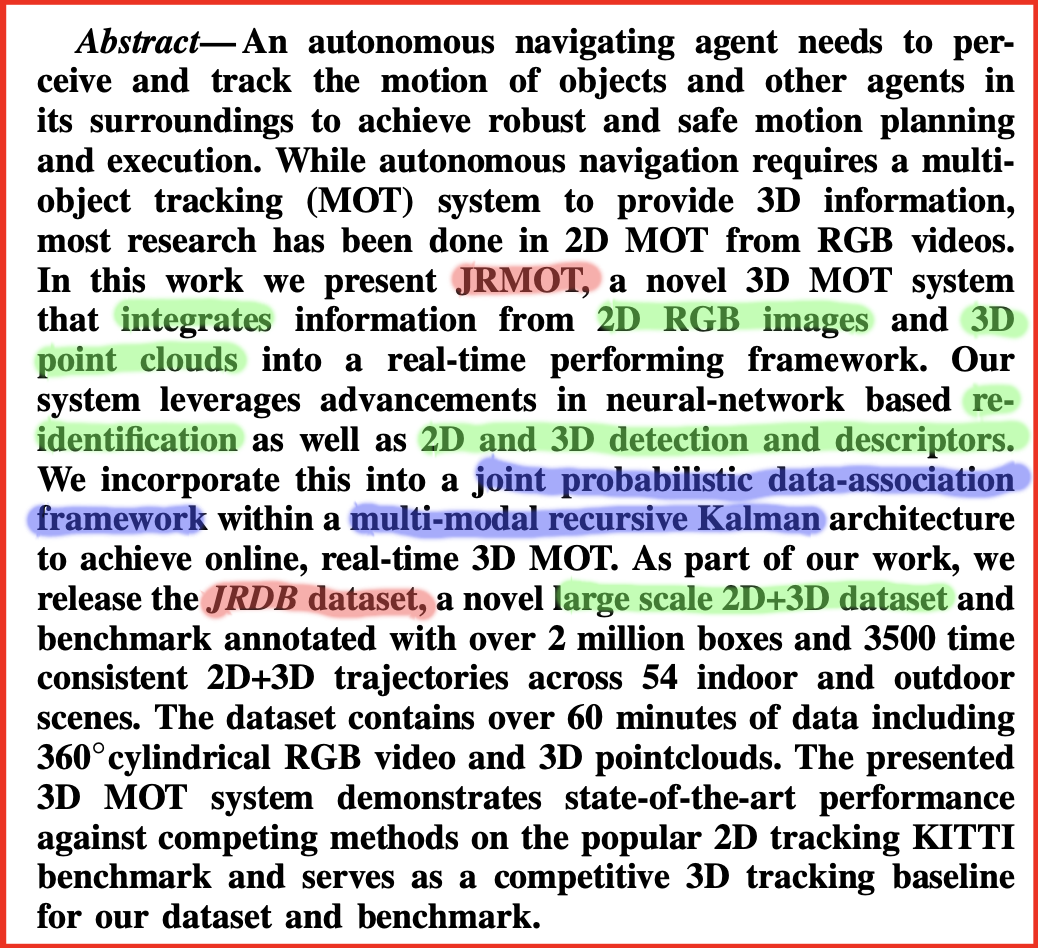

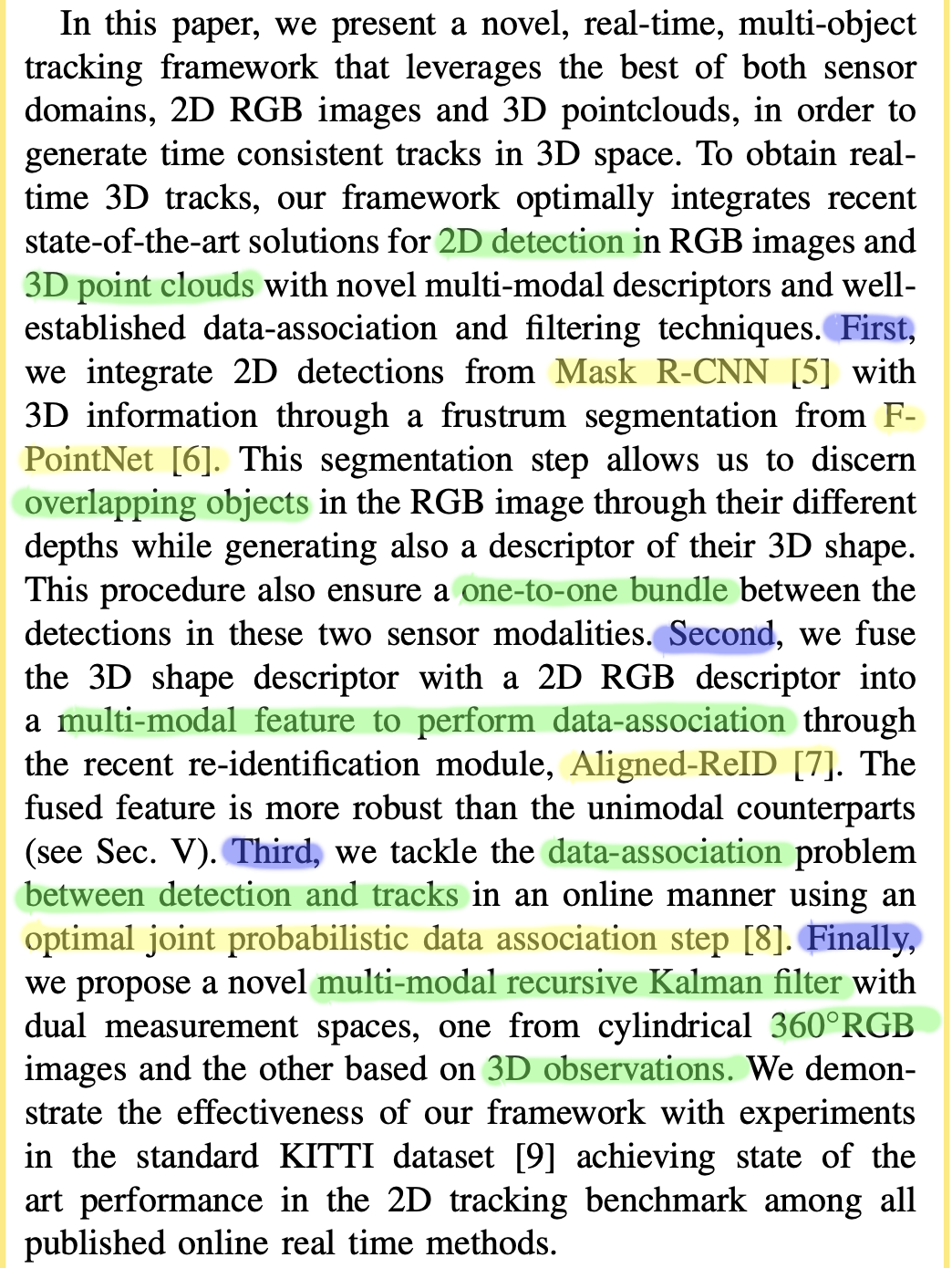

Abstract

- JRMOT is a novel 3D MOT system that integrates information from 2D RGB images and 3D point clouds into a real-time performing framework.

- The authors also released the JRDB dataset, a novel large-scale 2D+3D dataset.

- It demonstrates state-of-the-art performance against competing methods on the popular 2D tracking KITTI benchmark and serves as a competitive 3D tracking baseline for the JRDB dataset and benchmark.

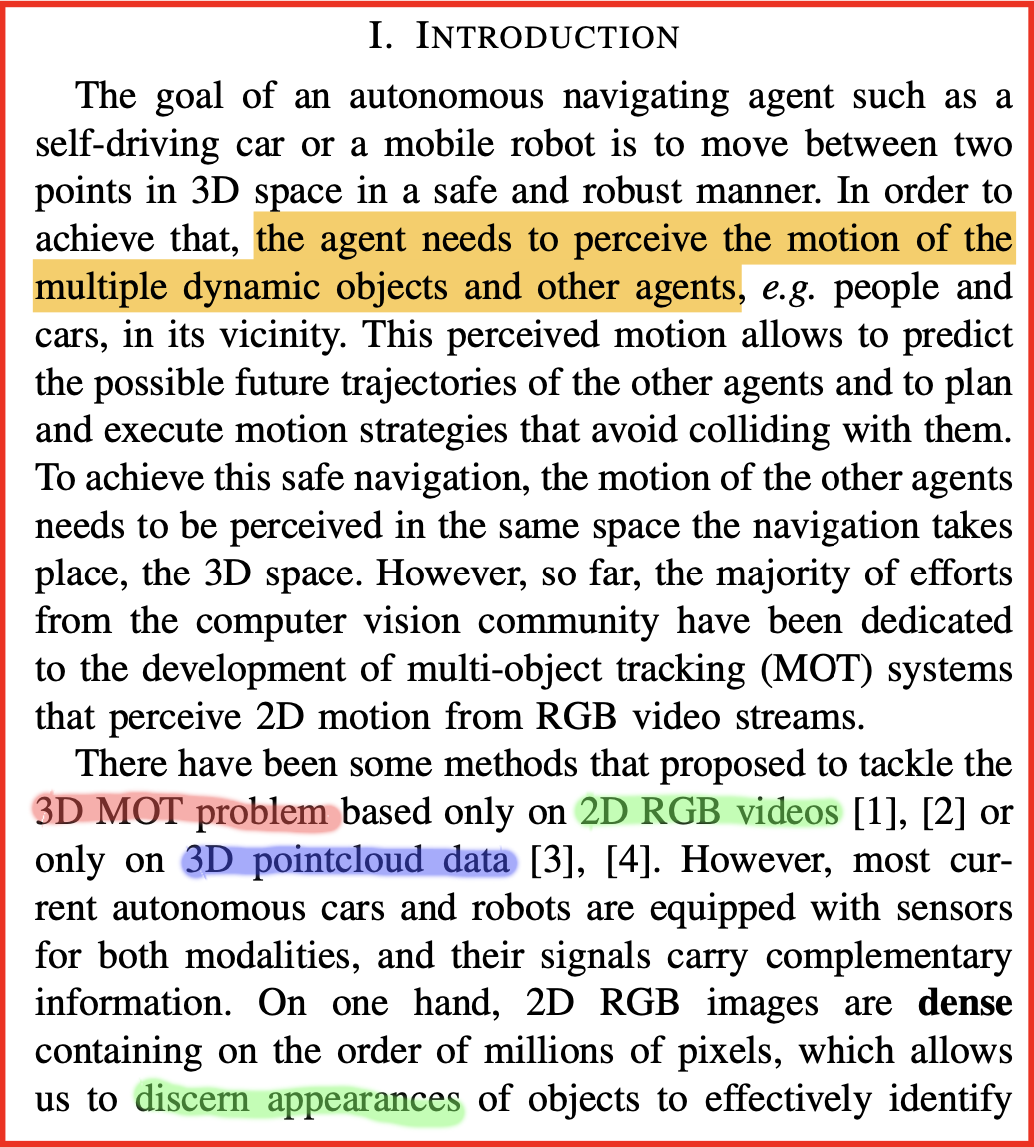

Problem Description

MOT: an agent needs to perceive the motion of multiple dynamic objects and other agents around it.

Recent approaches: most perceive 2D motion from RGB video streams.

Problem Solution

- It integrates 2D detections from Mask R-CNN and 3D information from F-PointNet.

- It fuses the 3D shape descriptor with a 2D RGB descriptor through Aligned-ReID.

- It uses an optimal joint probabilistic data association step.

- A novel multi-modal recursive Kalman filter is proposed.
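To make the idea of a recursive Kalman filter that consumes more than one measurement modality concrete, here is a minimal sketch. It is not the filter from the paper: it assumes a 1D constant-velocity state, two position measurements (one camera-derived, one LiDAR-derived) with independent noise, and a standard sequential update. All names (`Track1D`, `predict`, `update`, `step`) and the noise values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track1D:
    """Constant-velocity state along one axis (illustrative only)."""
    x: float          # position
    v: float          # velocity
    # Entries of the symmetric 2x2 covariance [[pxx, pxv], [pxv, pvv]]
    pxx: float = 1.0
    pxv: float = 0.0
    pvv: float = 1.0


def predict(t: Track1D, dt: float, q: float = 0.01) -> Track1D:
    """Propagate with F = [[1, dt], [0, 1]]; add process noise q on the diagonal."""
    x = t.x + dt * t.v
    pxx = t.pxx + 2.0 * dt * t.pxv + dt * dt * t.pvv + q
    pxv = t.pxv + dt * t.pvv
    pvv = t.pvv + q
    return Track1D(x, t.v, pxx, pxv, pvv)


def update(t: Track1D, z: Optional[float], r: float) -> Track1D:
    """Scalar position update (H = [1, 0]) with measurement noise variance r.

    A missing measurement (z is None) leaves the track unchanged, which is
    how a sensor dropout is handled in this sketch.
    """
    if z is None:
        return t
    s = t.pxx + r                      # innovation variance
    kx, kv = t.pxx / s, t.pxv / s      # Kalman gain
    y = z - t.x                        # innovation
    return Track1D(
        x=t.x + kx * y,
        v=t.v + kv * y,
        pxx=(1.0 - kx) * t.pxx,
        pxv=(1.0 - kx) * t.pxv,
        pvv=t.pvv - kv * t.pxv,
    )


def step(t: Track1D, dt: float,
         z_cam: Optional[float], z_lidar: Optional[float],
         r_cam: float = 1.0, r_lidar: float = 0.1) -> Track1D:
    """One recursive step: predict, then apply each modality's update in turn."""
    t = predict(t, dt)
    t = update(t, z_cam, r_cam)        # assumed noisier, camera-derived position
    t = update(t, z_lidar, r_lidar)    # assumed more precise, LiDAR-derived position
    return t


track = Track1D(x=0.0, v=1.0)
track = step(track, dt=0.1, z_cam=0.5, z_lidar=0.2)
print(track)
```

Applying the two updates one after the other is equivalent to a joint update only when the two measurement noises are independent, which is an assumption of this sketch. The lower-variance measurement pulls the estimate more strongly, and either measurement can be absent in a given frame.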

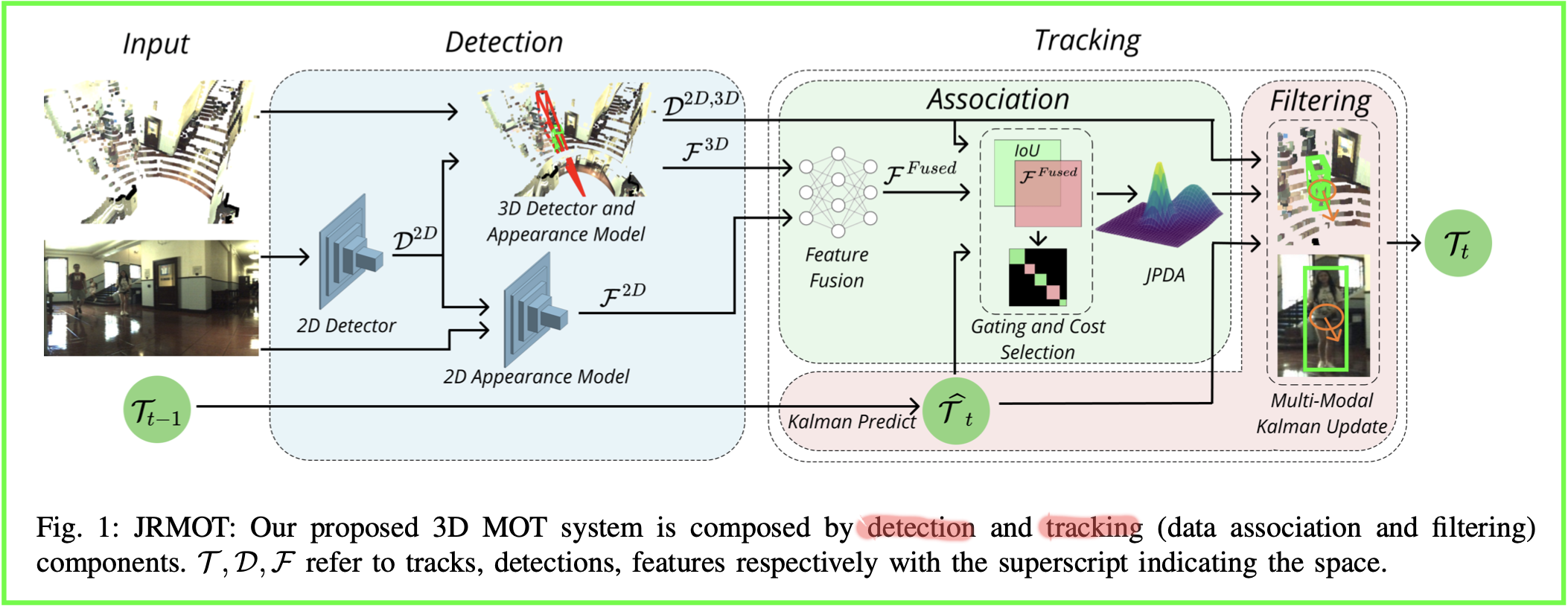

Conceptual Understanding

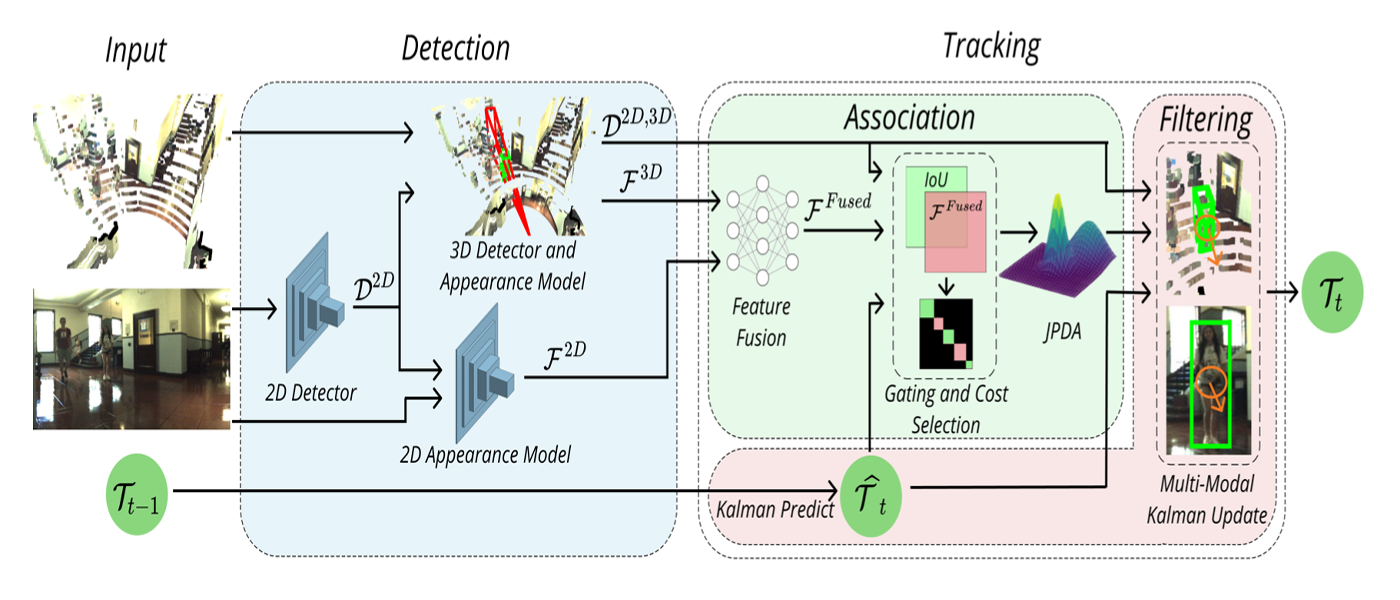

These figures show all components of JRMOT and its workflow.

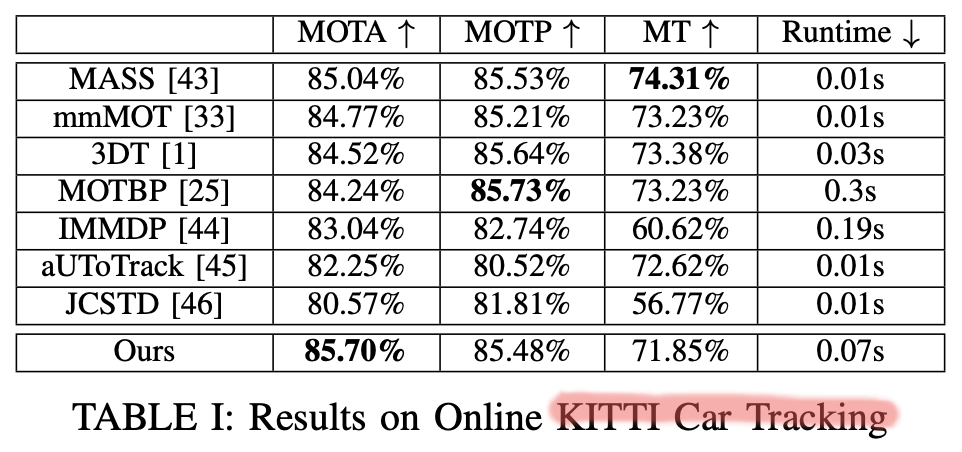

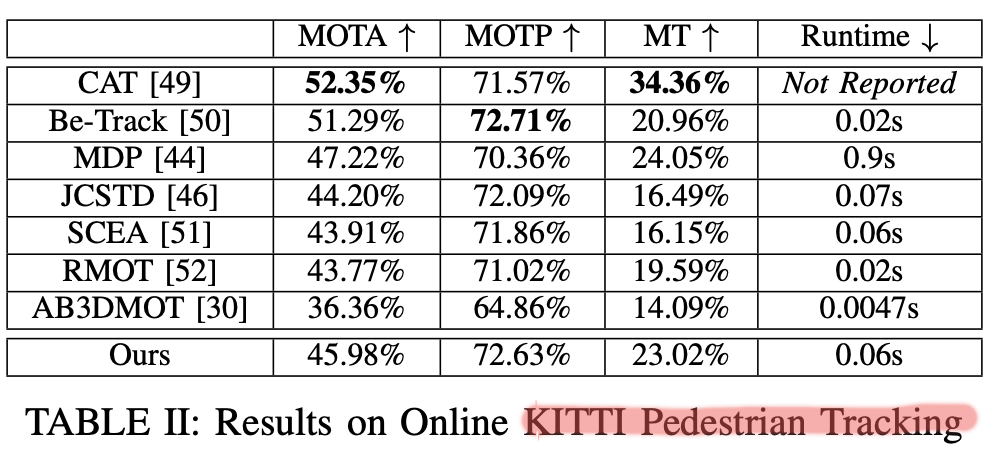
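As a rough illustration of the descriptor-fusion and association stages in this workflow, the sketch below fuses a 2D and a 3D descriptor and matches tracks to detections by appearance. It is a simplified stand-in, not the paper's method: fusion by plain concatenation, cosine distance, greedy matching in place of joint probabilistic data association, and the `max_dist` threshold are all assumptions, and every function name is hypothetical.

```python
import math
from typing import Dict, List, Sequence


def fuse(desc_2d: Sequence[float], desc_3d: Sequence[float]) -> List[float]:
    """Fuse two descriptors by concatenation (an assumption of this sketch)."""
    return list(desc_2d) + list(desc_3d)


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 1.0
    return 1.0 - dot / (na * nb)


def greedy_associate(track_descs: List[List[float]],
                     det_descs: List[List[float]],
                     max_dist: float = 0.3) -> Dict[int, int]:
    """Match each track to at most one detection, closest pairs first.

    Pairs farther apart than max_dist are never matched, so tracks and
    detections can remain unassociated.
    """
    pairs = sorted(
        (cosine_distance(t, d), i, j)
        for i, t in enumerate(track_descs)
        for j, d in enumerate(det_descs)
    )
    matches: Dict[int, int] = {}
    used_dets = set()
    for dist, i, j in pairs:
        if dist > max_dist:
            break                      # remaining pairs are even farther apart
        if i in matches or j in used_dets:
            continue
        matches[i] = j
        used_dets.add(j)
    return matches


tracks = [fuse([1.0, 0.0], [0.0, 1.0]), fuse([0.0, 1.0], [1.0, 0.0])]
dets = [fuse([0.0, 0.9], [1.1, 0.0]), fuse([1.0, 0.1], [0.0, 1.0])]
print(greedy_associate(tracks, dets))
```

Greedy matching commits to a single hard assignment per track, whereas a joint probabilistic data association step weighs several candidate assignments; the sketch only shows where fused descriptors enter the matching cost.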

Experiments

JRMOT is evaluated on the online KITTI Car and Pedestrian tracking benchmarks, where it achieves SOTA performance.

Code

[Updating]

Note

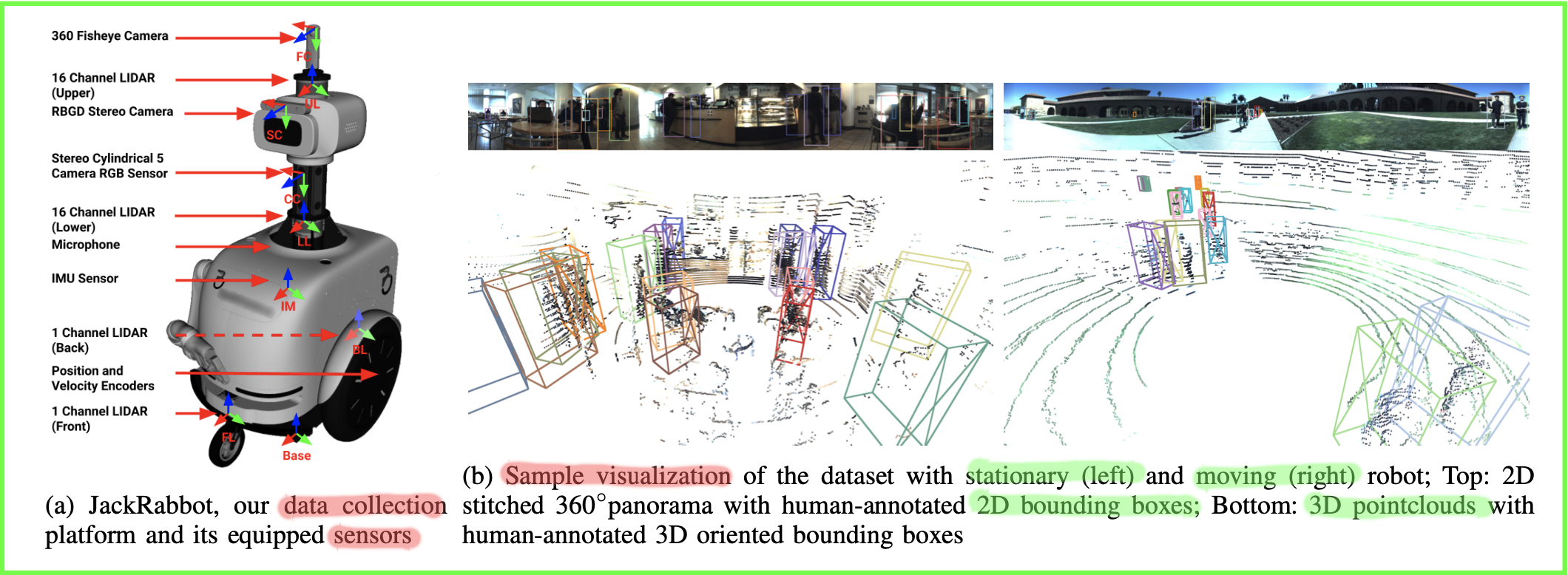

It shows the data collection platform and a sample visualization of the dataset.

References

- Shenoi A, Patel M, Gwak J Y, et al. JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset[J]. arXiv preprint arXiv:2002.08397, 2020.