ResNet [1] classifies images using deep residual learning. Below are some notes on reading the paper and implementing it.

Paper & Code & Note

Paper: Deep Residual Learning for Image Recognition (CVPR 2016 paper)

Code: PyTorch

Note: ResNet

Paper

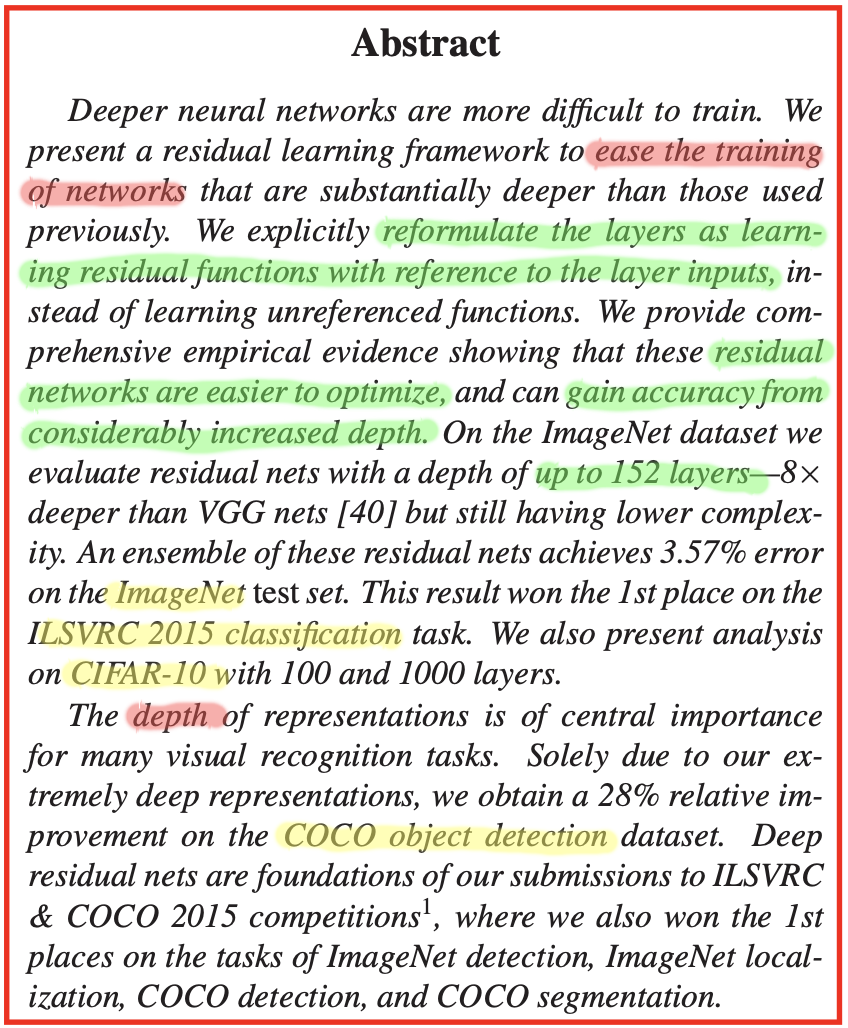

Abstract

As the abstract states, the paper presents a residual learning framework named ResNet, which is built on residual building blocks and applied to classification and detection.

- It reformulates the layers as learning residual functions with reference to the layer inputs.
- Residual networks are easier to optimize, and can gain accuracy from considerably increased depth.

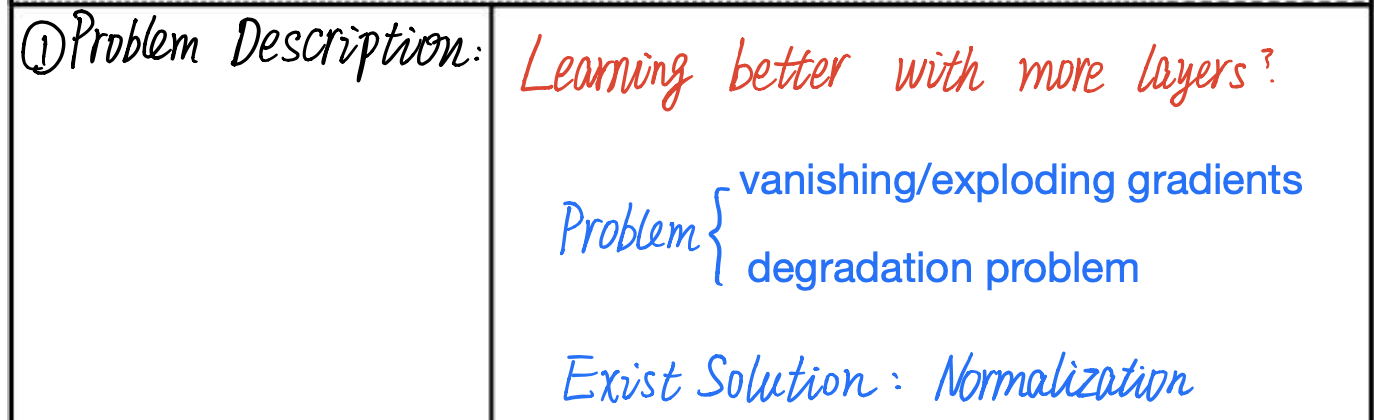

Problem Description

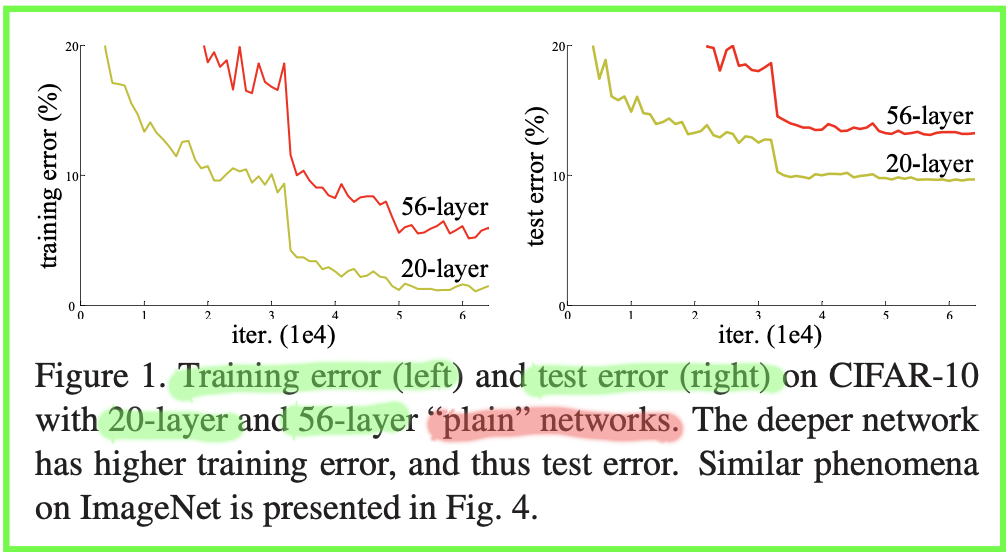

Driven by the significance of depth, a question arises: Is learning better networks as easy as stacking more layers?

The paper shows that a deeper plain network can have higher error, in training as well as in testing.

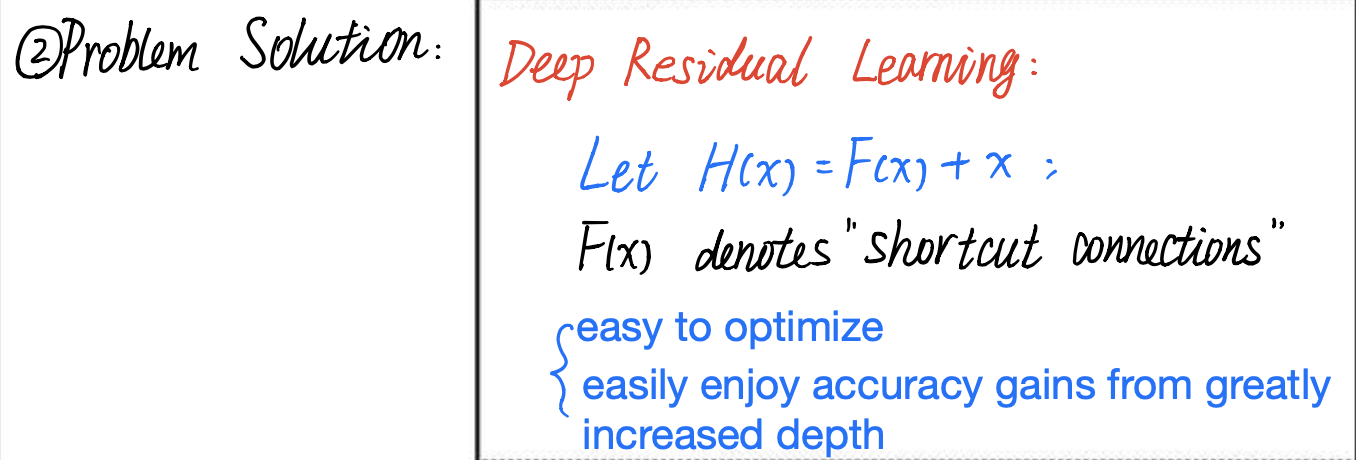

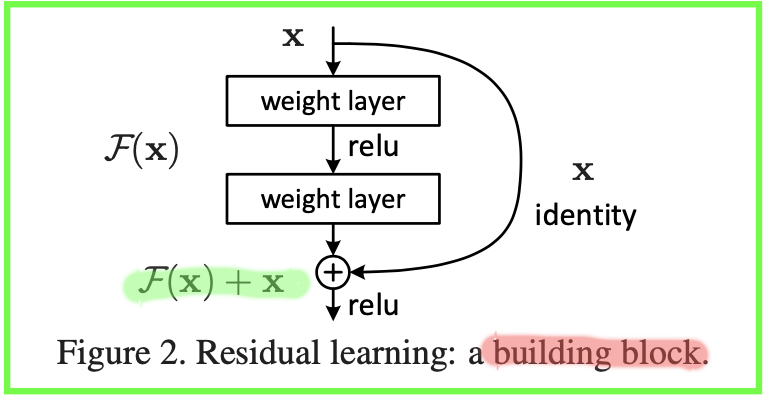

Problem Solution

- Intuitively, residual learning has less to learn, because the residual is generally small. Therefore, the learning problem is easier.
- Mathematically speaking, the shortcut connection gives gradients a direct path back to earlier layers, so they are much less likely to vanish. That is why the residual is easier to learn.
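The gradient argument above can be made explicit with a short derivation. The notation is an assumption of this sketch: $x$ is the block input, $F$ the residual function learned by the stacked layers, $y$ the block output, and $\mathcal{L}$ the loss. The activation applied after the addition is left out to keep the expression simple.

```latex
y = x + F(x)
\qquad\Longrightarrow\qquad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}
    \left( I + \frac{\partial F}{\partial x} \right)
```

The identity term $I$ passes the upstream gradient through unchanged, so the signal reaching earlier layers does not depend only on a product of the (possibly small) factors $\partial F / \partial x$.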

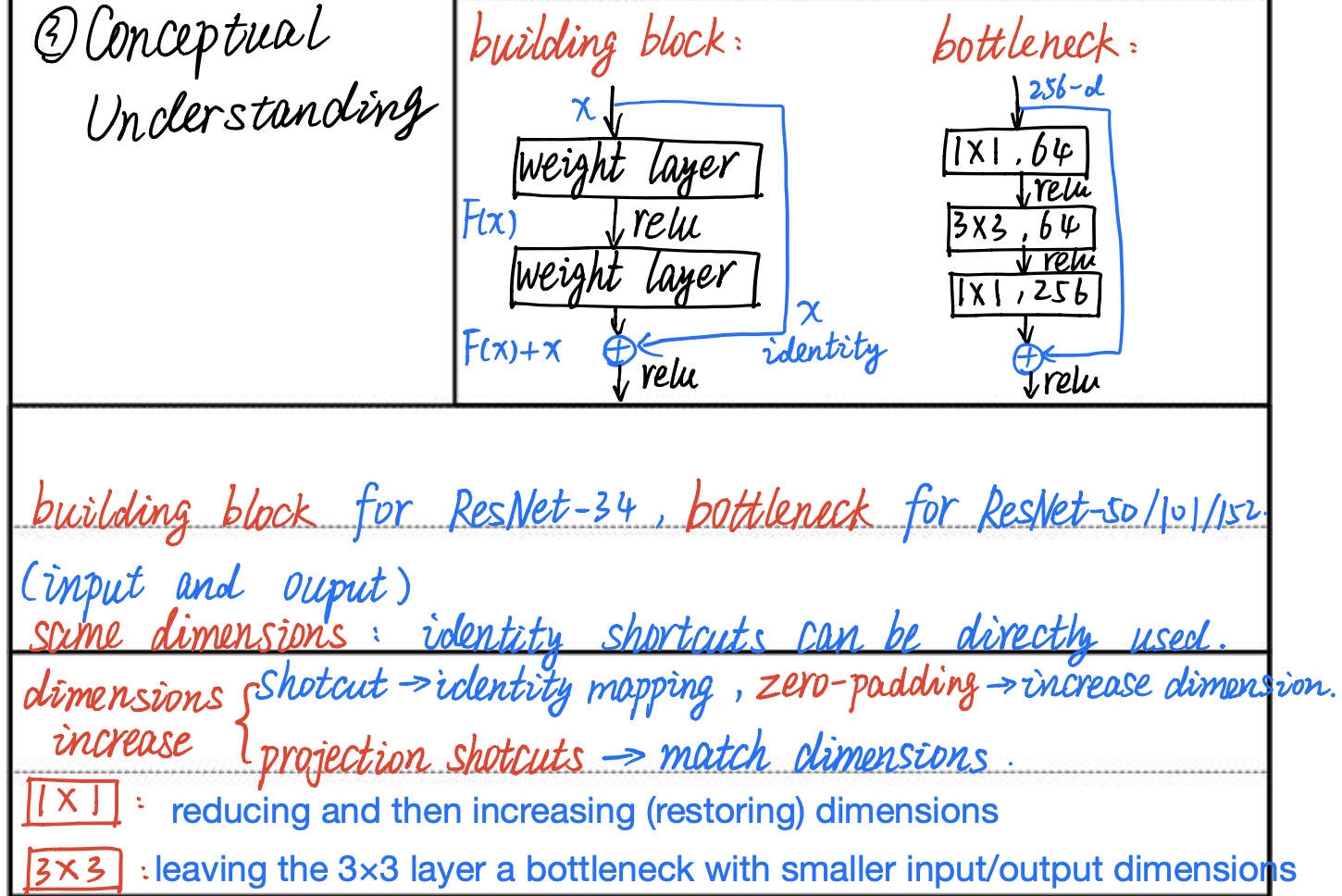

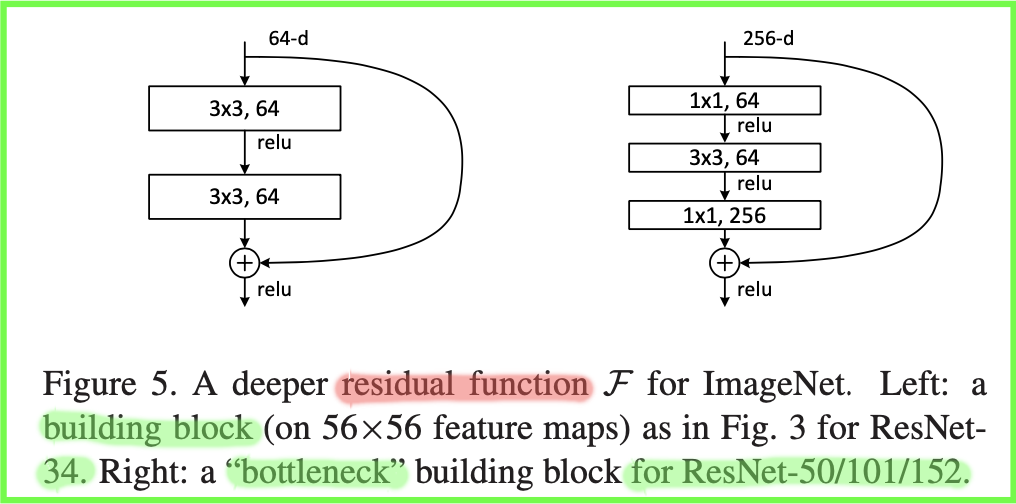

Conceptual Understanding

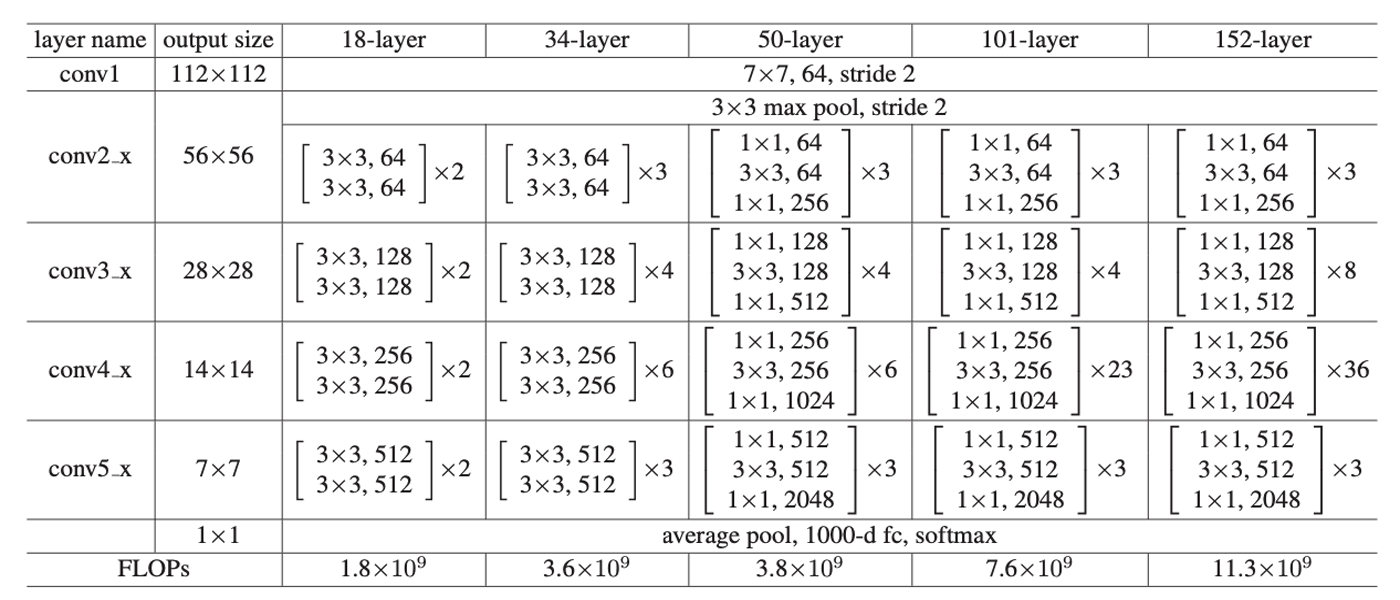

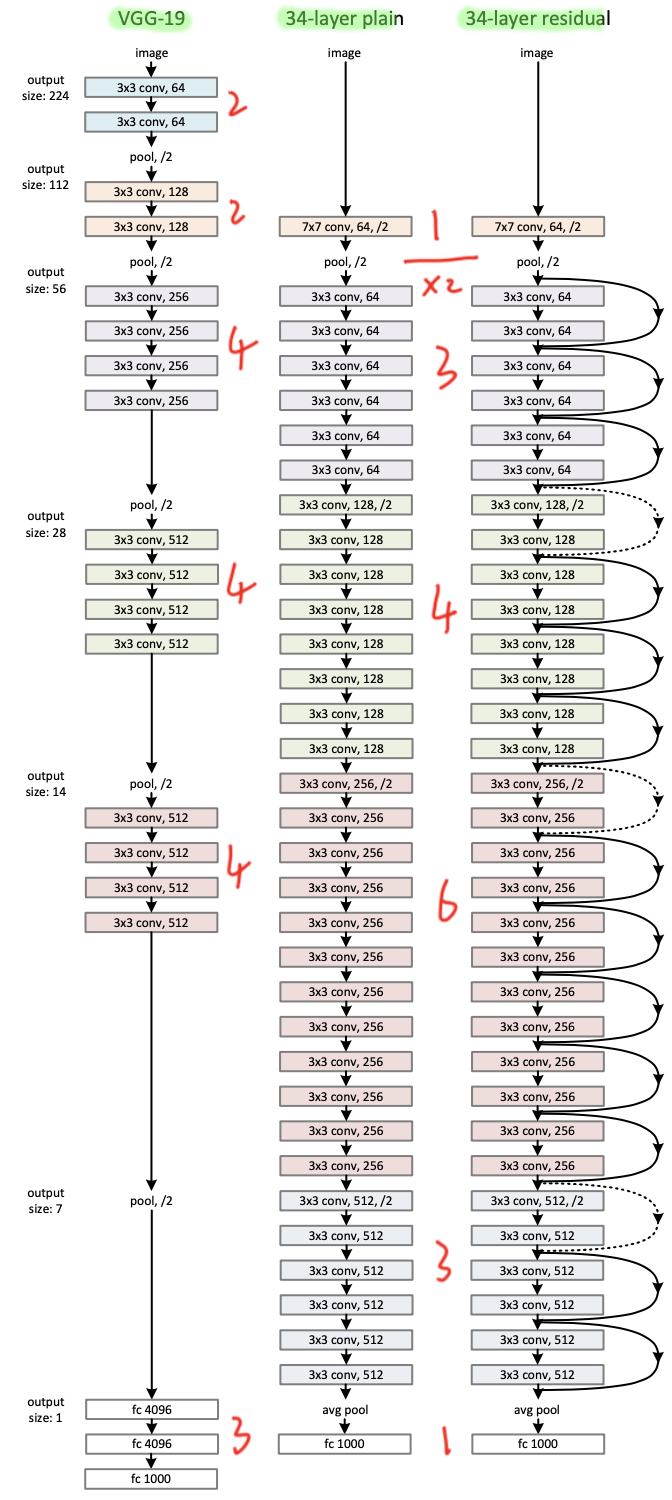

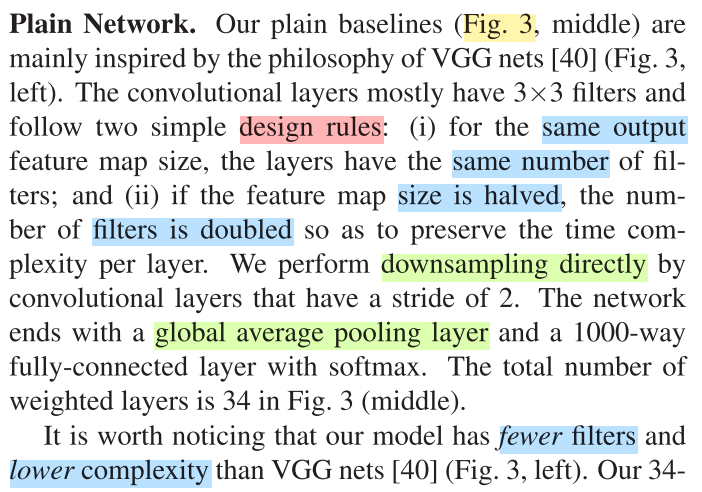

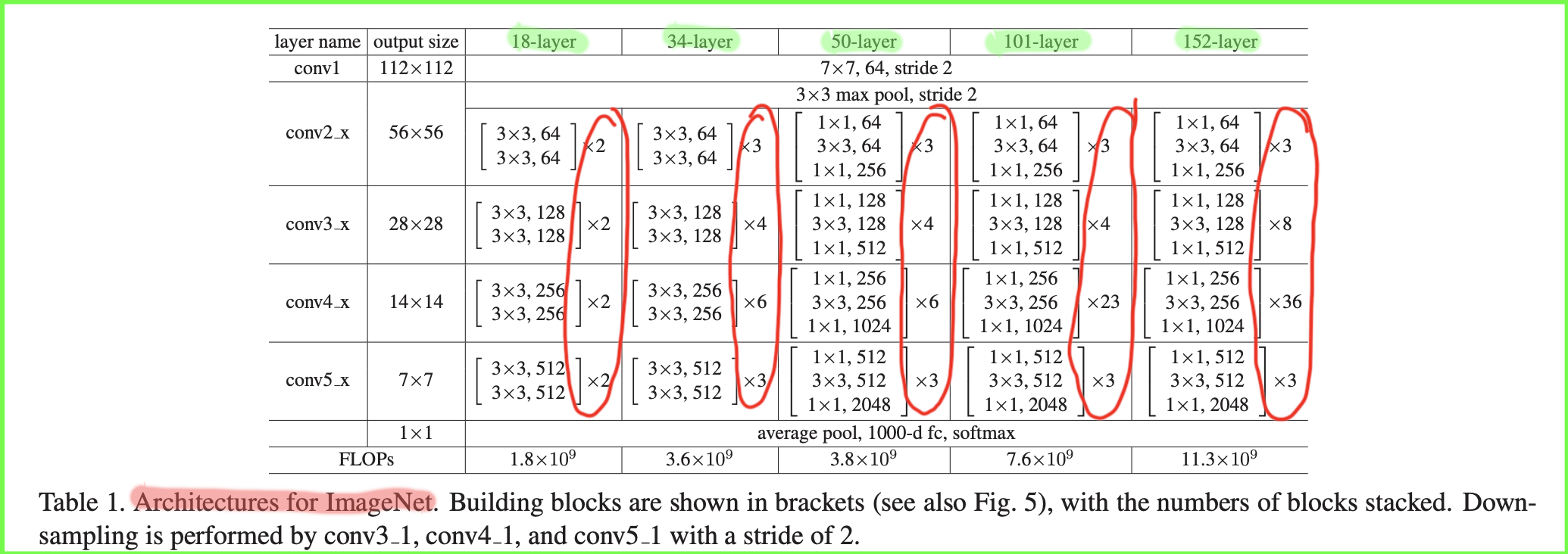

- It presents two versions of the building block for ResNet: BasicBlock and Bottleneck.
- It describes how the shortcut is chosen for different dimensions, that is, whether the input and output have the same dimensions or the dimensions increase.
- It shows how the 1x1 and 3x3 convolution layers work.
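A rough weight count helps show why the Bottleneck design uses 1x1 convolutions around the 3x3 one. The sketch below is only arithmetic in plain Python. The helper names are hypothetical, and the channel widths (64 and 256, with a reduction factor of 4) are taken from the paper's building-block figure. Biases and batch normalization parameters are ignored.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weight count of a bias-free 2D convolution."""
    return in_channels * out_channels * kernel_size * kernel_size


def basic_style_params(channels):
    """Two stacked 3x3 convolutions at a fixed width (BasicBlock style)."""
    return 2 * conv_params(channels, channels, 3)


def bottleneck_params(channels, reduction=4):
    """1x1 reduce, 3x3 at the reduced width, 1x1 restore (Bottleneck style)."""
    mid = channels // reduction
    return (conv_params(channels, mid, 1)
            + conv_params(mid, mid, 3)
            + conv_params(mid, channels, 1))


print(basic_style_params(64))    # 73728
print(bottleneck_params(256))    # 69632
print(basic_style_params(256))   # 1179648
```

Under these assumptions, a 256-channel Bottleneck costs about as much as a 64-channel BasicBlock, while two plain 3x3 convolutions at 256 channels would cost roughly 17 times more.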

Core Concept

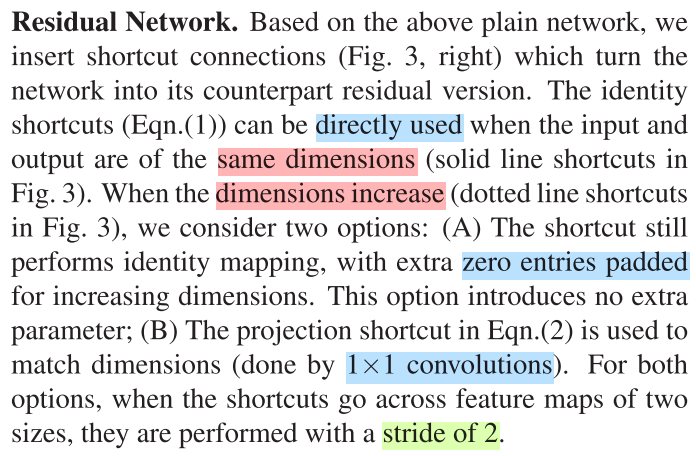

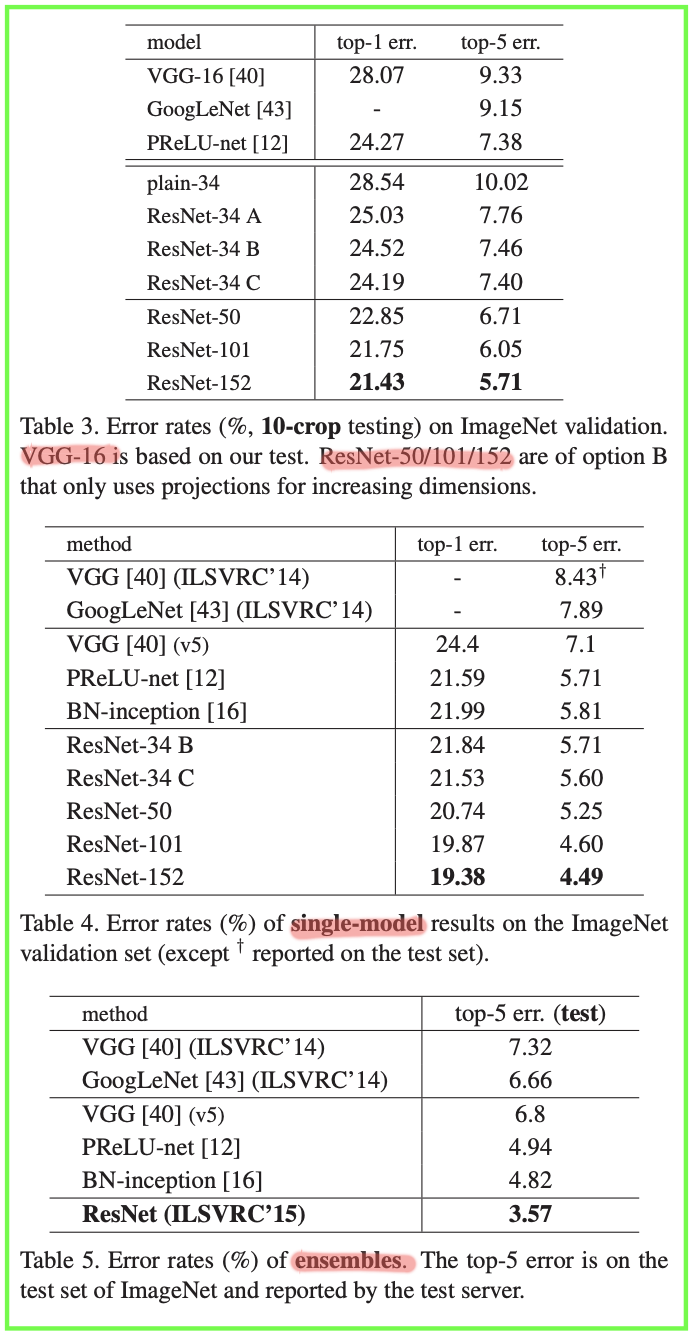

Experiments

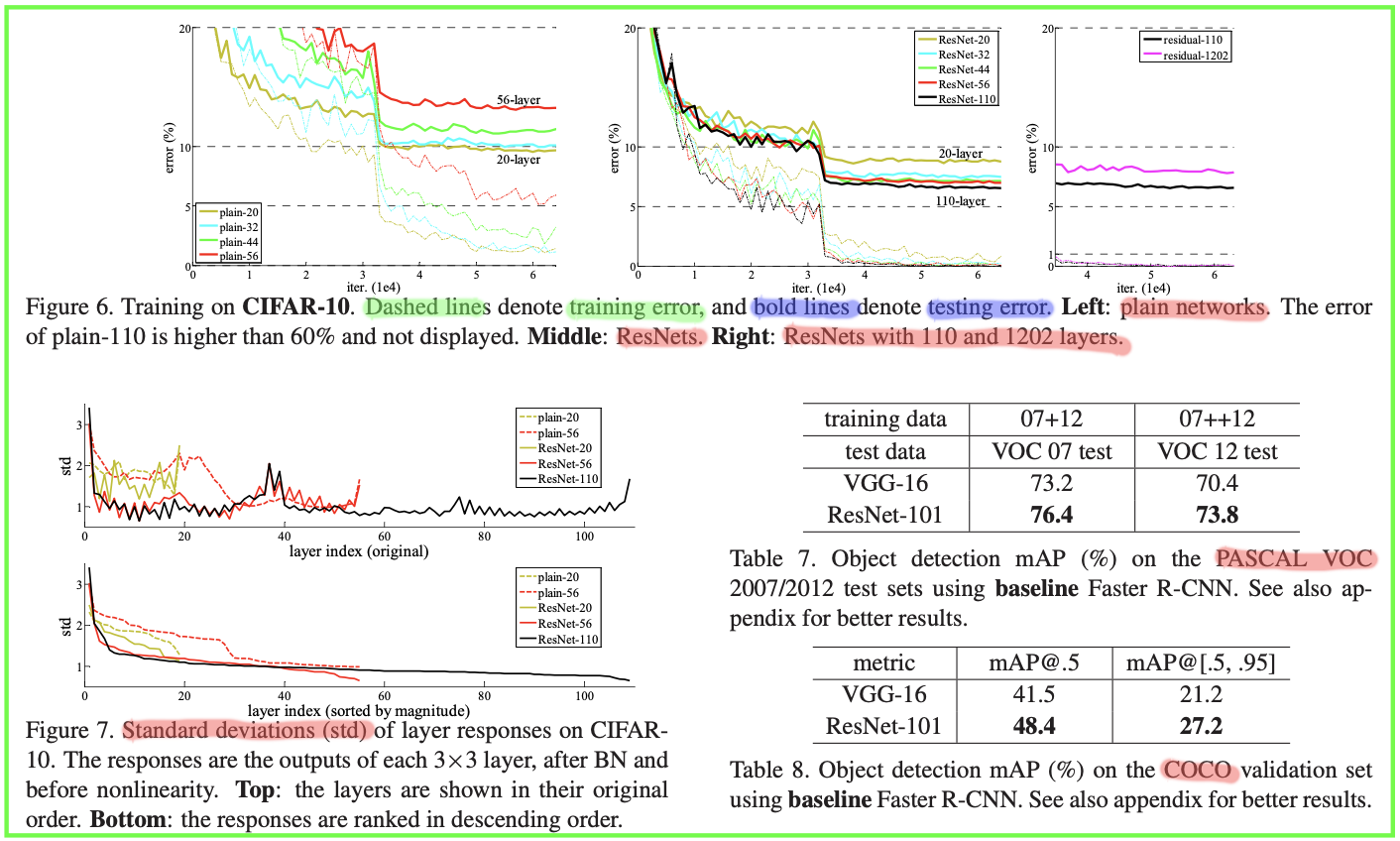

It includes the image classification datasets ImageNet and CIFAR-10, and the object detection datasets PASCAL VOC and COCO.

Code

The complete code can be found in the ResNet repository [2].

Details of implementation

Convolution layer

def conv3x3(in_planes, out_planes, stride=1, groups=1, dilation=1):
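Only the signature is shown here, so the body below is an assumption modeled on the torchvision reference implementation. It may differ from the code in the linked repository. `conv1x1` is an extra helper added for illustration, since the blocks also rely on 1x1 convolutions.

```python
import torch
import torch.nn as nn


def conv3x3(in_planes, out_planes, stride=1, groups=1, dilation=1):
    """3x3 convolution; padding equals dilation, so stride 1 keeps H and W."""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=dilation, groups=groups, bias=False,
                     dilation=dilation)


def conv1x1(in_planes, out_planes, stride=1):
    """1x1 convolution (illustrative helper): changes the channel count only."""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride,
                     bias=False)


x = torch.randn(1, 64, 56, 56)
print(conv3x3(64, 64)(x).shape)             # torch.Size([1, 64, 56, 56])
print(conv3x3(64, 128, stride=2)(x).shape)  # torch.Size([1, 128, 28, 28])
print(conv1x1(64, 256)(x).shape)            # torch.Size([1, 256, 56, 56])
```

The bias is disabled because each convolution is assumed to be followed by batch normalization, which has its own shift parameter.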

Building block

class BasicBlock(nn.Module):

class Bottleneck(nn.Module):
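The class bodies are not shown above, so here is a minimal sketch of how the two blocks could look. It assumes a torchvision-like layout: the constructor arguments, the `expansion` attribute, and placing the stride on the 3x3 convolution in `Bottleneck` are assumptions, not necessarily what the linked repository does.

```python
import torch
import torch.nn as nn


class BasicBlock(nn.Module):
    expansion = 1  # output channels = planes * expansion

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super().__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, padding=1,
                               bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample  # projects the shortcut when shapes differ

    def forward(self, x):
        identity = x if self.downsample is None else self.downsample(x)
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return self.relu(out + identity)  # residual addition, then activation


class Bottleneck(nn.Module):
    expansion = 4  # the final 1x1 convolution widens planes by this factor

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super().__init__()
        out_planes = planes * self.expansion
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # Assumption: stride sits on the 3x3 convolution, as in torchvision.
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, out_planes, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_planes)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x if self.downsample is None else self.downsample(x)
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        return self.relu(out + identity)


x = torch.randn(2, 64, 56, 56)
print(BasicBlock(64, 64)(x).shape)  # torch.Size([2, 64, 56, 56])

# Dimensions increase: project the shortcut with a strided 1x1 convolution.
proj = nn.Sequential(
    nn.Conv2d(64, 256, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(256),
)
print(Bottleneck(64, 64, stride=2, downsample=proj)(x).shape)  # torch.Size([2, 256, 28, 28])
```

When input and output shapes match, the shortcut is the identity. When they differ, `downsample` has to produce a tensor with the same shape as the block output so that the addition is valid.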

ResNet

class ResNet(nn.Module):

Pretrain

def _resnet(arch, block, layers, pretrained, progress, **kwargs):

Different layer

def resnet18(pretrained=False, progress=True, **kwargs):
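The variants differ only in the block type and in how many blocks each of the four stages stacks. As a sketch, the table below lists the per-stage block counts from the paper's architecture table and recovers the nominal depth from them. The `CONFIGS` dictionary and the `depth` helper are hypothetical names used for illustration, not part of the linked code.

```python
# (block type, blocks per stage) for the standard variants.
CONFIGS = {
    "resnet18": ("BasicBlock", [2, 2, 2, 2]),
    "resnet34": ("BasicBlock", [3, 4, 6, 3]),
    "resnet50": ("Bottleneck", [3, 4, 6, 3]),
    "resnet101": ("Bottleneck", [3, 4, 23, 3]),
    "resnet152": ("Bottleneck", [3, 8, 36, 3]),
}

# Weighted layers inside one block: two 3x3 convs, or 1x1 + 3x3 + 1x1.
CONVS_PER_BLOCK = {"BasicBlock": 2, "Bottleneck": 3}


def depth(arch):
    """Nominal depth: convolutions in all blocks, plus the stem conv and the FC layer."""
    block, layers = CONFIGS[arch]
    return CONVS_PER_BLOCK[block] * sum(layers) + 2


for arch in CONFIGS:
    print(arch, depth(arch))
# resnet18 18
# resnet34 34
# resnet50 50
# resnet101 101
# resnet152 152
```

Projection shortcuts are not counted in these totals, which is why the numbers match the names exactly.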

Note

More details about the code implementation can be found in [3] and [4].

References

[1] He, Kaiming, et al. “Deep residual learning for image recognition.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

[2] ResNet. https://github.com/Gojay001/DeepLearning-pwcn/tree/master/Classification/ResNet/Code

[3] Darkeyers. ResNet implementation by PyTorch official. https://blog.csdn.net/darkeyers/article/details/90475475

[4] Little teenager. CNN model you have to know: ResNet. https://zhuanlan.zhihu.com/p/31852747