These are notes on reading and implementing Network In Network (NIN) for image classification.


Paper & Code & Note

Paper: Network In Network (arXiv 2013 paper)

Code: PyTorch

Note: NIN

Paper

Abstract

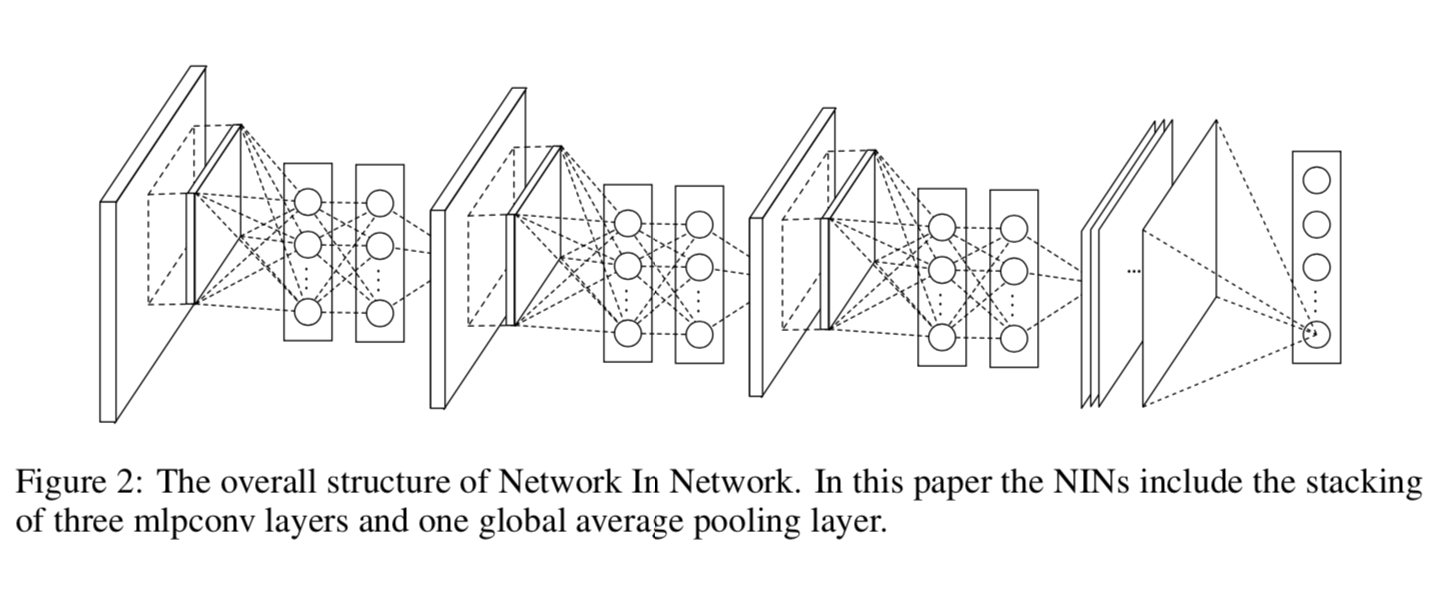

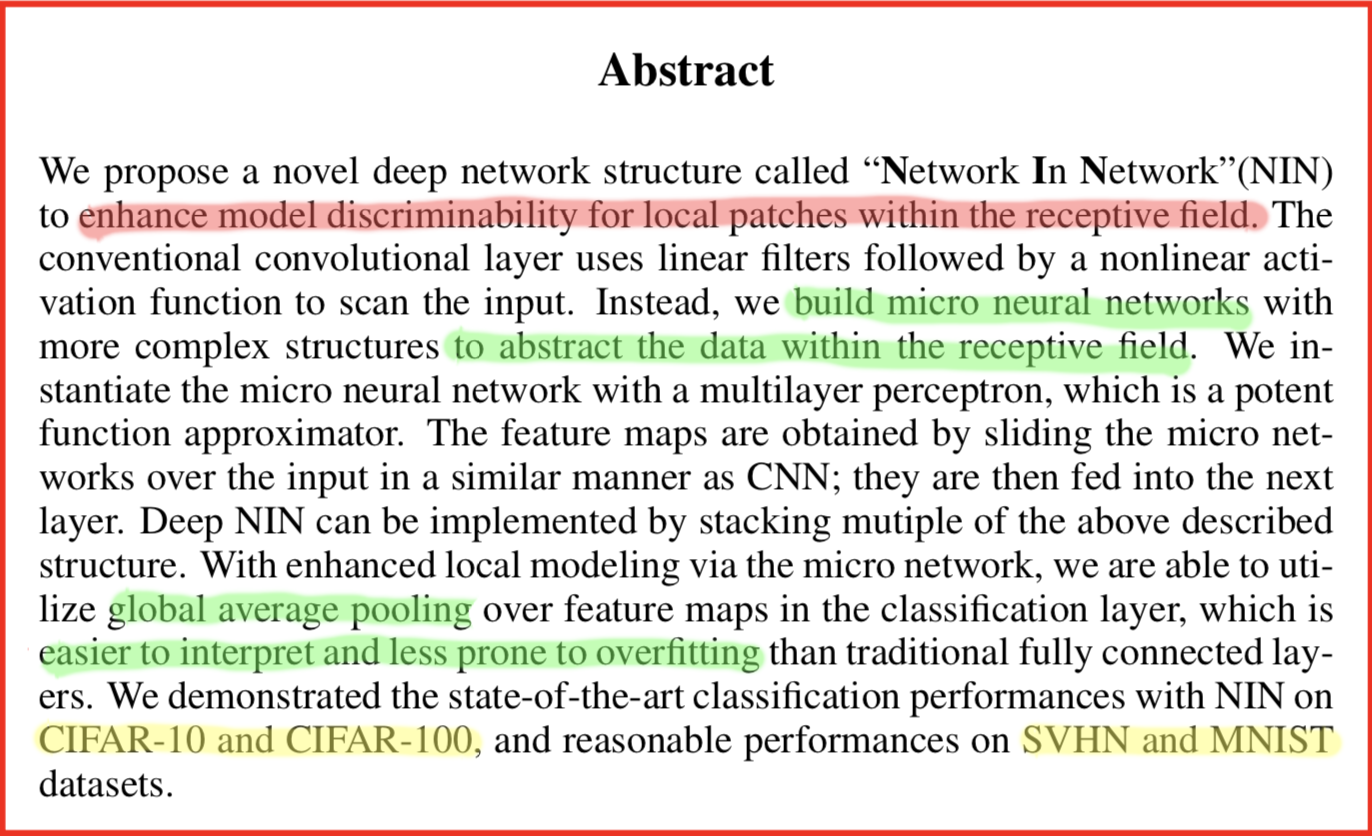

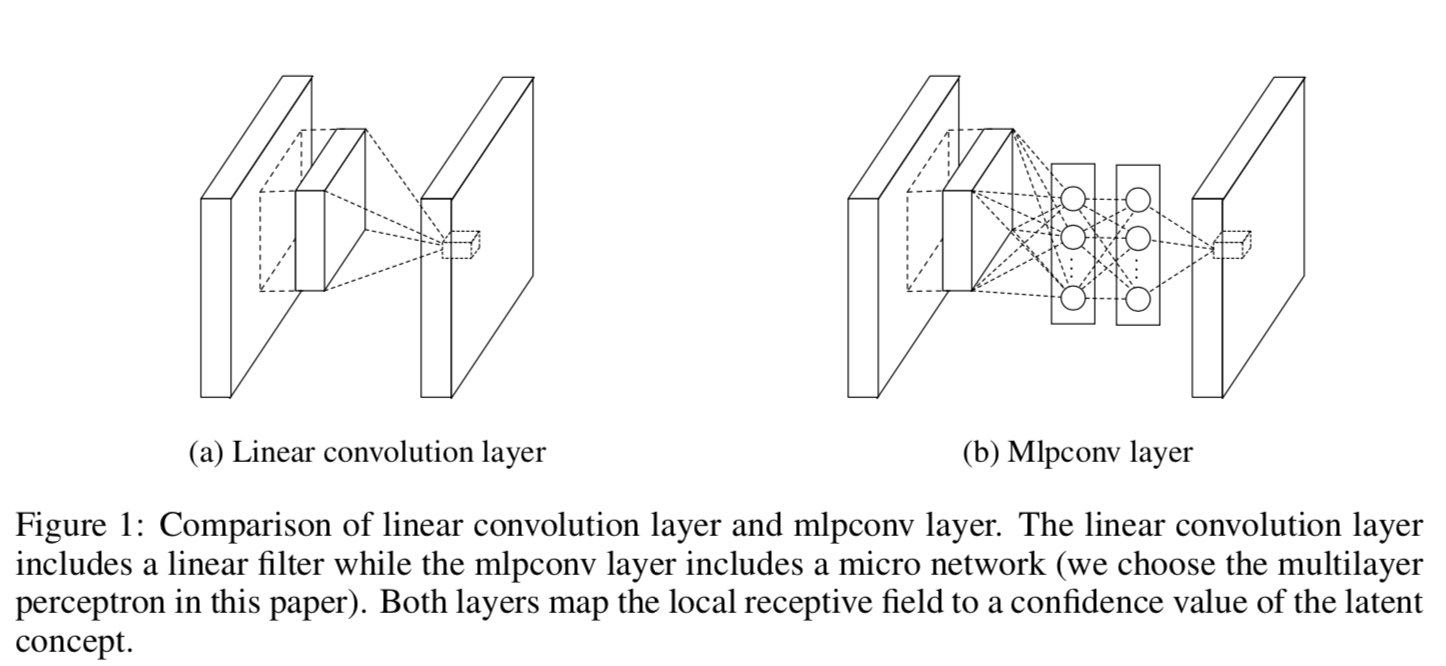

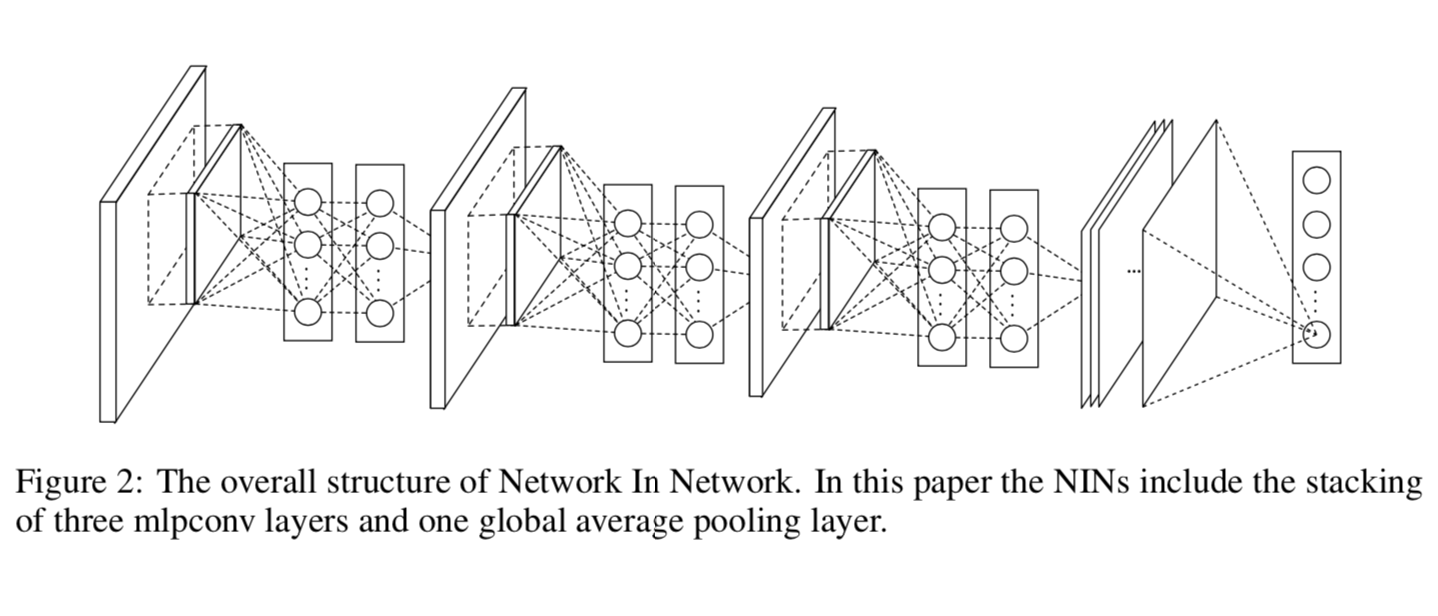

As the abstract states, the paper builds a “micro network” structure called Network In Network (NIN) to replace the linear convolution filters of traditional convolutional neural networks (CNNs), and uses global average pooling (GAP) instead of fully connected (FC) layers to classify images.

- Build micro neural networks with more complex structures to abstract the data within the receptive field.

- Utilize global average pooling over feature maps in the classification layer, which is easier to interpret and less prone to overfitting than traditional fully connected layers.
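To make the second point concrete, here is a minimal PyTorch sketch of global average pooling. The tensor sizes (a batch of 4, 10 class maps of 8x8) are assumptions chosen for illustration, not values from the paper. It shows that GAP reduces each feature map to one number, has no trainable parameters, and so is much smaller than a fully connected layer on the same input.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

num_classes = 10
# Hypothetical output of the last conv stage: one 8x8 feature map per class.
feature_maps = torch.randn(4, num_classes, 8, 8)

# Global average pooling: average each feature map down to a single value.
gap = nn.AdaptiveAvgPool2d(1)
logits = gap(feature_maps).flatten(1)        # shape (4, num_classes)

# The same result computed by hand as a mean over the spatial dimensions.
manual = feature_maps.mean(dim=(2, 3))

# GAP has no weights; an FC layer on the flattened maps has many.
gap_params = sum(p.numel() for p in gap.parameters())
fc = nn.Linear(num_classes * 8 * 8, num_classes)
fc_params = sum(p.numel() for p in fc.parameters())

print(logits.shape, gap_params, fc_params)
```

Because each output value is the average of exactly one feature map, every map can be read as a confidence map for its class, which is where the interpretability claim comes from.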

Problem Description

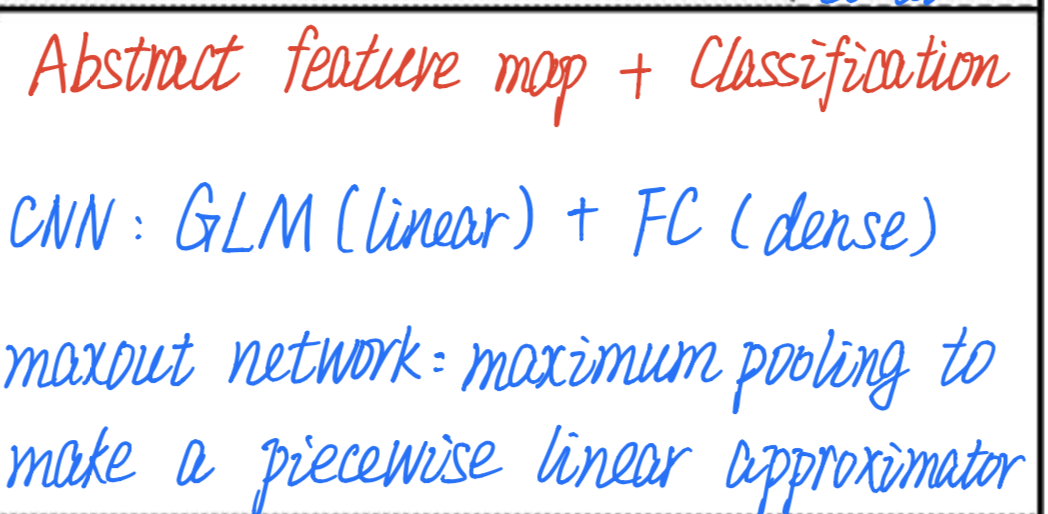

It shows the steps of classifying images and describes the traditional and state-of-the-art methods.

Problem Solution

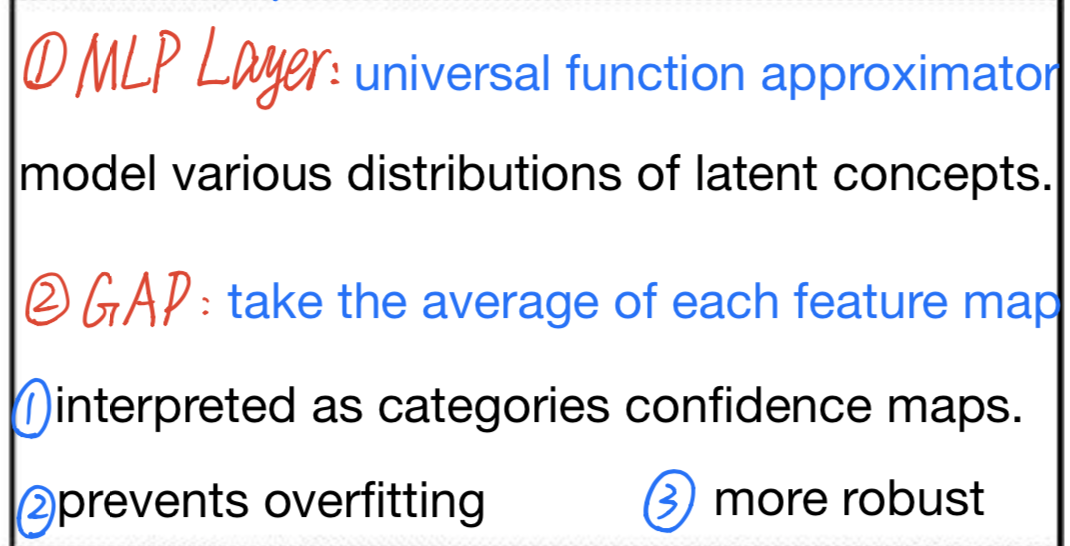

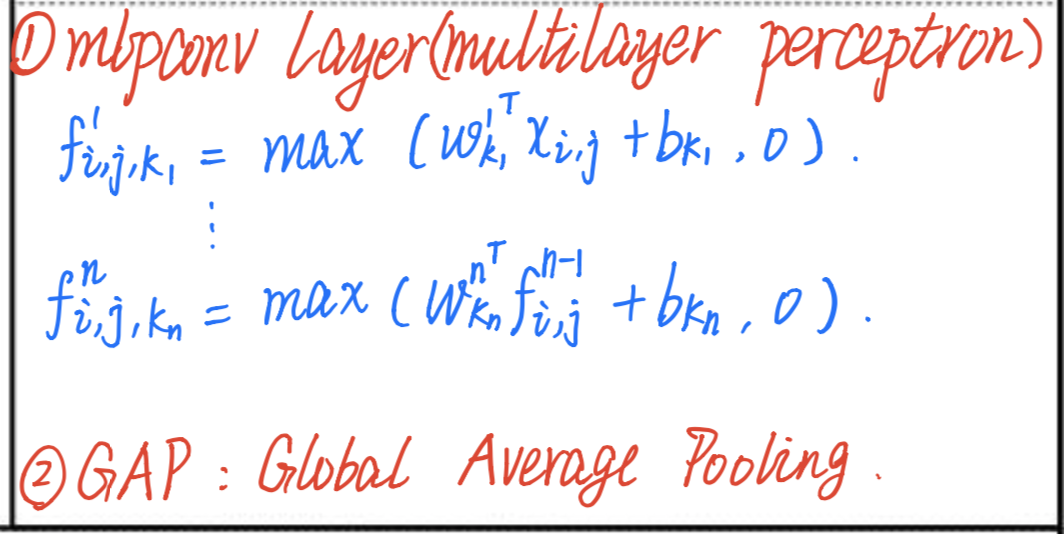

The solution consists of the MLP (multilayer perceptron) convolution layer, called mlpconv, and GAP (global average pooling).

Conceptual Understanding

It describes how mlpconv works and what GAP means.

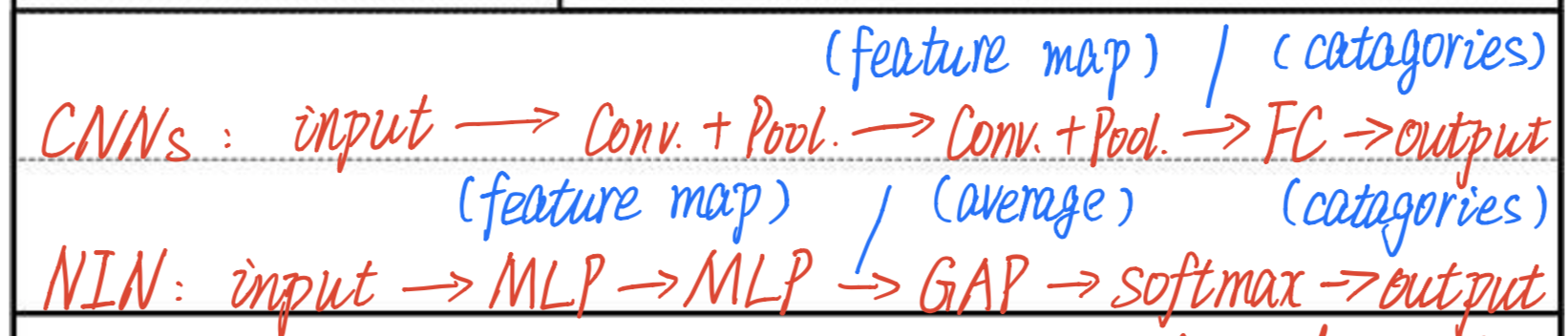
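A single mlpconv layer can be sketched in PyTorch as a spatial convolution followed by two 1x1 convolutions, each with a ReLU. The 1x1 convolutions act as a small MLP shared across all spatial positions. The helper name `mlpconv` and the channel widths (192, 160, 96) are assumptions for illustration, not necessarily the configuration used in the linked code.

```python
import torch
import torch.nn as nn


def mlpconv(in_ch, mid_ch, out_ch, kernel_size, padding=0):
    """One mlpconv block: a k x k convolution followed by two 1x1 convolutions."""
    return nn.Sequential(
        # Ordinary convolution over the local receptive field.
        nn.Conv2d(in_ch, mid_ch, kernel_size=kernel_size, padding=padding),
        nn.ReLU(inplace=True),
        # 1x1 convolutions: the "micro network" applied at every position.
        nn.Conv2d(mid_ch, mid_ch, kernel_size=1),
        nn.ReLU(inplace=True),
        nn.Conv2d(mid_ch, out_ch, kernel_size=1),
        nn.ReLU(inplace=True),
    )


block = mlpconv(3, 192, 96, kernel_size=5, padding=2)
x = torch.randn(2, 3, 32, 32)   # a CIFAR-sized dummy batch
y = block(x)
print(y.shape)                   # spatial size is preserved by padding=2
```

The 1x1 layers change only the channel dimension, so the block's spatial output size is determined entirely by the first convolution.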

Core Concept

It presents the most important concept of Network In Network (NIN) and explains the steps by which traditional CNNs and NIN each classify images.

It also compares the two approaches.
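The NIN pipeline described here (stacked mlpconv layers, then GAP, with no fully connected layer) can be sketched end to end as follows. `TinyNIN`, its channel widths, and the dropout rate are hypothetical and kept small for readability. This is not the model from the paper or from the linked repository.

```python
import torch
import torch.nn as nn


def mlpconv(in_ch, out_ch, kernel_size, padding):
    # k x k convolution followed by two 1x1 convolutions, each with ReLU.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size, padding=padding), nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, kernel_size=1), nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, kernel_size=1), nn.ReLU(inplace=True),
    )


class TinyNIN(nn.Module):
    """A small NIN-style classifier (illustrative sizes only)."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            mlpconv(3, 32, kernel_size=5, padding=2),
            nn.MaxPool2d(3, stride=2, padding=1),    # 32x32 -> 16x16
            nn.Dropout(0.5),
            mlpconv(32, 64, kernel_size=5, padding=2),
            nn.MaxPool2d(3, stride=2, padding=1),    # 16x16 -> 8x8
            nn.Dropout(0.5),
            # The last block emits one feature map per class.
            mlpconv(64, num_classes, kernel_size=3, padding=1),
        )
        self.gap = nn.AdaptiveAvgPool2d(1)

    def forward(self, x):
        x = self.features(x)
        # GAP turns each class map into one score; no FC layer is needed.
        return self.gap(x).flatten(1)


model = TinyNIN(num_classes=10).eval()
with torch.no_grad():
    scores = model(torch.randn(2, 3, 32, 32))
print(scores.shape)
```

A traditional CNN would instead flatten the final feature maps and pass them through one or more `nn.Linear` layers, which is where most of its parameters usually sit.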

Experimental Results

Datasets:

- CIFAR-10

- CIFAR-100

- SVHN

- MNIST

Notes:

- The local receptive field size and weight decay were fine-tuned.

- Dropout was used.

Code

Model Detail

NIN( |

PyTorch Implementation

The complete code can be found here.

The network is implemented as follows.

class NIN(nn.Module): |

TensorFlow Implementation

The code and more details can be found in [2].

Note

More details of mlpconv and cccp can be found in [3].

The effect of the 1 by 1 convolution kernel is explained in [4].
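As a quick check of the usual interpretation, the sketch below shows that a 1x1 convolution is equivalent to applying the same fully connected layer to the channel vector at every pixel. The channel counts and input size are arbitrary assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

conv = nn.Conv2d(8, 4, kernel_size=1)
linear = nn.Linear(8, 4)

# Copy the 1x1 conv weights (shape 4x8x1x1) into the linear layer (shape 4x8).
with torch.no_grad():
    linear.weight.copy_(conv.weight.view(4, 8))
    linear.bias.copy_(conv.bias)

x = torch.randn(2, 8, 5, 5)

with torch.no_grad():
    conv_out = conv(x)
    # Move channels last, apply the linear layer per pixel, move channels back.
    linear_out = linear(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

same = torch.allclose(conv_out, linear_out, atol=1e-5)
print(same)
```

This is why the 1x1 layers in mlpconv can be read as an MLP that slides over the feature maps: they mix information across channels without looking at neighboring positions.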

References

[1] Min Lin, Qiang Chen, and Shuicheng Yan. “Network in network.” arXiv preprint arXiv:1312.4400 (2013).

[2] Eugene. “NETWORK-IN-NETWORK IMPLEMENTATION USING TENSORFLOW.” https://embedai.wordpress.com/2017/07/23/network-in-network-implementation-using-tensorflow/

[3] Ou. “(Paper) Network Analysis of Network In Network.” https://blog.csdn.net/mounty_fsc/article/details/51746111

[4] ysu. “What is the role of 1 by 1 convolution kernel?” http://www.caffecn.cn/?/question/136